Elon Musk and Sam Altman claim that 2026 will be the year of the AI singularity – the point at which technological growth becomes irreversible and uncontrollable

“The technological singularity is a theoretical scenario in which technological growth becomes uncontrollable and irreversible, culminating in profound and unpredictable changes to human civilization.” – IBM

Transhumanists and technocrats Elon Musk and Sam Altman believe that 2026 will be the year in which AI reaches the so-called moment of “singularity”.

Musk wrote in two recent posts: “We have entered the singularity” and “2026 is the year of the singularity.” Just over a year ago, he said we were at the “event horizon of the singularity.”

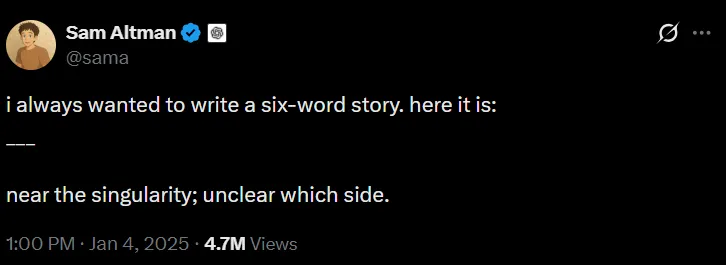

One year ago, Sam Altman declared that the singularity was about to be reached.

Again, according to IBM:

“The technological singularity is a theoretical scenario in which technological growth becomes uncontrollable and irreversible, culminating in profound and unpredictable changes to human civilization.”

In theory, this phenomenon would be driven by the emergence of an artificial intelligence (AI) that surpasses human cognitive abilities and can continuously improve itself. The term “singularity,” borrowed from mathematics, refers to a point at which existing models break down and the continuity of understanding is lost. It describes an era in which machines not only reach human intelligence but far surpass it, setting in motion a self-reinforcing cycle of technological evolution.

“The theory states that such advances could develop at such a rapid pace that humans would be unable to foresee, contain, or stop the process. This rapid development could lead to the emergence of synthetic intelligences that are not only autonomous but also capable of innovations beyond human comprehension or control. The possibility of machines creating even more advanced versions of themselves could propel humanity into a new reality where humans are no longer the most capable beings. The consequences of reaching this singularity point could be either positive or catastrophic for humanity. Currently relegated to the realm of science fiction, it can nevertheless be valuable to imagine what such a future might look like so that humanity can guide AI development in a way that serves the interests of civilization.” (Popular Mechanics)

Will this be the year in which this extremely dangerous scenario, which the IT giants themselves have warned us about, comes to pass?

Or is this nothing more than typical exaggeration by tech nerds?

Or is this coded language for: “The AI bubble is about to burst, and all the serfs will pay for it – while we sip cocktails on Epstein Island”?

yogaesoteric

February 16, 2026