‘Our minds can be hijacked’: the tech insiders who fear a smartphone dystopia (1)

Justin Rosenstein had tweaked his laptop’s operating system to block Reddit, banned himself from Snapchat, which he compares to heroin, and imposed limits on his use of Facebook. But even that wasn’t enough. In August 2017, the 34-year-old tech executive took a more radical step to restrict his use of social media and other addictive technologies.

Rosenstein purchased a new iPhone and instructed his assistant to set up a parental-control feature to prevent him from downloading any apps.

He was particularly aware of the allure of Facebook “likes”, which he describes as “bright dings of pseudo-pleasure” that can be as hollow as they are seductive. And Rosenstein should know: he was the Facebook engineer who created the “like” button in the first place.

A decade after he stayed up all night coding a prototype of what was then called an “awesome” button, Rosenstein belongs to a small but growing band of Silicon Valley heretics who complain about the rise of the so-called “attention economy”: an internet shaped around the demands of an advertising economy.

These “refuseniks” are rarely founders or chief executives, who have little incentive to deviate from the motto that their companies are “making the world a better place”. Instead, they tend to have worked a rung or two down the corporate ladder: designers, engineers and product managers who, like Rosenstein, several years ago put in place the building blocks of a digital world from which they are now trying to disentangle themselves. “It is very common”, Rosenstein says, “for humans to develop things with the best of intentions and for them to have unintended, negative consequences.”

Rosenstein, who also helped create Gchat during a stint at Google, and now leads a San Francisco-based company that improves office productivity, appears most concerned about the psychological effects on people who, research shows, touch, swipe or tap their phone 2,617 times a day.

There is growing concern that as well as addicting users, technology is contributing toward so-called “continuous partial attention”, severely limiting people’s ability to focus, and possibly lowering IQ. One recent study showed that the mere presence of smartphones damages cognitive capacity – even when the device is turned off. “Everyone is distracted”, Rosenstein says. “All of the time.”

But those concerns are trivial compared with the devastating impact upon the political system that some of Rosenstein’s peers believe can be attributed to the rise of social media and the attention-based market that drives it.

Drawing a straight line between addiction to social media and political earthquakes like Brexit and the rise of Donald Trump, they contend that digital forces have completely upended the political system and, left unchecked, could even render democracy as we know it obsolete.

In 2007, Rosenstein was one of a small group of Facebook employees who decided to create a path of least resistance – a single click – to “send little bits of positivity” across the platform. Facebook’s “like” feature was, Rosenstein says, “wildly” successful: engagement soared as people enjoyed the short-term boost they got from giving or receiving social affirmation, while Facebook harvested valuable data about the preferences of users that could be sold to advertisers. The idea was soon copied by Twitter, with its heart-shaped “likes” (previously star-shaped “favourites”), Instagram, and countless other apps and websites.

It was Rosenstein’s colleague, Leah Pearlman, then a product manager at Facebook and on the team that created the Facebook “like”, who announced the feature in a 2009 blogpost. Now 35 and an illustrator, Pearlman confirmed via email that she, too, has grown disaffected with Facebook “likes” and other addictive feedback loops. She has installed a web browser plug-in to eradicate her Facebook news feed, and hired a social media manager to monitor her Facebook page so that she doesn’t have to.

“One reason I think it is particularly important for us to talk about this now is that we may be the last generation that can remember life before [modern technology]”, Rosenstein says. It may or may not be relevant that Rosenstein, Pearlman and most of the tech insiders questioning today’s attention economy are in their 30s, members of the last generation that can remember a world in which telephones were plugged into walls.

It is revealing that many of these younger technologists are weaning themselves off their own products, sending their children to elite Silicon Valley schools where iPhones, iPads and even laptops are banned. They appear to be abiding by a Biggie Smalls lyric from their own youth about the perils of dealing crack cocaine: never get high on your own supply.

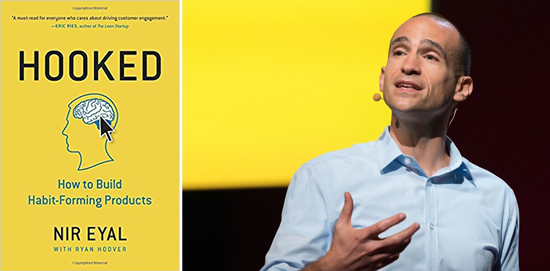

One morning in April 2017, designers, programmers and tech entrepreneurs from across the world gathered at a conference centre on the shore of the San Francisco Bay. They had each paid up to $1,700 to learn how to manipulate people into habitual use of their products, on a course curated by conference organiser Nir Eyal.

Eyal, 39, the author of Hooked: How to Build Habit-Forming Products, has spent several years consulting for the tech industry, teaching techniques he developed by closely studying how the Silicon Valley giants operate. “The technologies we use have turned into compulsions, if not full-fledged addictions”, Eyal writes. “It’s the impulse to check a message notification. It’s the pull to visit YouTube, Facebook, or Twitter for just a few minutes, only to find yourself still tapping and scrolling an hour later.” None of this is an accident, he writes. It is all “just as their designers intended”.

He explains the subtle psychological tricks that can be used to make people develop habits, such as varying the rewards people receive to create “a craving”, or exploiting negative emotions that can act as “triggers”. “Feelings of boredom, loneliness, frustration, confusion and indecisiveness often instigate a slight pain or irritation and prompt an almost instantaneous and often mindless action to quell the negative sensation”, Eyal writes.

Attendees of the 2017 Habit Summit might have been surprised when Eyal walked on stage to announce that the keynote speech was about “something a little different”. He wanted to address the growing concern that technological manipulation was somehow harmful or immoral. He told his audience that they should be careful not to abuse persuasive design, and wary of crossing a line into coercion.

But he was defensive of the techniques he teaches, and dismissive of those who compare tech addiction to drugs. “We’re not freebasing Facebook and injecting Instagram here”, he said. He flashed up a slide of a shelf filled with sugary baked goods. “Just as we shouldn’t blame the baker for making such delicious treats, we can’t blame tech makers for making their products so good we want to use them”, he said. “Of course that’s what tech companies will do. And frankly: do we want it any other way?”

Without irony, Eyal finished his talk with some personal tips for resisting the lure of technology. He told his audience he uses a Chrome extension, called DF YouTube, “which scrubs out a lot of those external triggers” he writes about in his book, and recommended an app called Pocket Points that “rewards you for staying off your phone when you need to focus”.

Finally, Eyal confided the lengths he goes to protect his own family. He has installed in his house an outlet timer connected to a router that cuts off access to the internet at a set time every day. “The idea is to remember that we are not powerless”, he said. “We are in control.”

But are we? If the people who built these technologies are taking such radical steps to wean themselves free, can the rest of us reasonably be expected to exercise our free will?

Not according to Tristan Harris, a 33-year-old former Google employee turned vocal critic of the tech industry. “All of us are jacked into this system”, he says. “All of our minds can be hijacked. Our choices are not as free as we think they are.”

Harris, who has been branded “the closest thing Silicon Valley has to a conscience”, insists that billions of people have little choice over whether they use these now ubiquitous technologies, and are largely unaware of the invisible ways in which a small number of people in Silicon Valley are shaping their lives.

A graduate of Stanford University, Harris studied under BJ Fogg, a behavioural psychologist revered in tech circles for mastering the ways technological design can be used to persuade people. Many of his students, including Eyal, have gone on to prosperous careers in Silicon Valley.

Harris is the student who went rogue; a whistleblower of sorts, he is lifting the curtain on the vast powers accumulated by technology companies and the ways they are using that influence. “A handful of people, working at a handful of technology companies, through their choices will steer what a billion people are thinking today”, he said at a TED Talk in Vancouver.

“I don’t know a more urgent problem than this”, Harris says. “It’s changing our democracy, and it’s changing our ability to have the conversations and relationships that we want with each other.” Harris went public – giving talks, writing papers, meeting lawmakers and campaigning for reform – after three years struggling to effect change inside Google’s Mountain View headquarters.

It all began in 2013, when he was working as a product manager at Google, and circulated a thought-provoking memo, A Call To Minimize Distraction & Respect Users’ Attention, to 10 close colleagues. It struck a chord, spreading to some 5,000 Google employees, including senior executives who rewarded Harris with an impressive-sounding new job: he was to be Google’s in-house design ethicist and product philosopher.

Looking back, Harris sees that he was promoted into a marginal role. “I didn’t have a social support structure at all”, he says. Still, he adds: “I got to sit in a corner and think and read and understand.”

He explored how LinkedIn exploits a need for social reciprocity to widen its network; how YouTube and Netflix autoplay videos and next episodes, depriving users of a choice about whether or not they want to keep watching; how Snapchat created its addictive Snapstreaks feature, encouraging near-constant communication between its mostly teenage users.

The techniques these companies use are not always generic: they can be algorithmically tailored to each person. An internal Facebook report leaked in 2017, for example, revealed that the company can identify when teens feel “insecure”, “worthless” and “need a confidence boost”. Such granular information, Harris adds, is “a perfect model of what buttons you can push in a particular person”.

Tech companies can exploit such vulnerabilities to keep people hooked; manipulating, for example, when people receive “likes” for their posts, ensuring they arrive when an individual is likely to feel vulnerable, or in need of approval, or maybe just bored. And the very same techniques can be sold to the highest bidder. “There’s no ethics”, he says. A company paying Facebook to use its levers of persuasion could be a car business targeting tailored advertisements to different types of users who want a new vehicle. Or it could be a Moscow-based troll farm seeking to turn voters in a swing county in Wisconsin.

Harris believes that tech companies never deliberately set out to make their products addictive. They were responding to the incentives of an advertising economy, experimenting with techniques that might capture people’s attention, even stumbling across highly effective design by accident.

A friend at Facebook told Harris that designers initially decided the notification icon, which alerts people to new activity such as “friend requests” or “likes”, should be blue. It fit Facebook’s style and, the thinking went, would appear “subtle and innocuous”. “But no one used it”, Harris says. “Then they switched it to red and of course everyone used it.”

That red icon is now everywhere. When smartphone users glance at their phones, dozens or hundreds of times a day, they are confronted with small red dots beside their apps, pleading to be tapped. “Red is a trigger colour”, Harris says. “That’s why it is used as an alarm signal.”

The most seductive design, Harris explains, exploits the same psychological susceptibility that makes gambling so compulsive: variable rewards. When we tap those apps with red icons, we don’t know whether we’ll discover an interesting email, an avalanche of “likes”, or nothing at all. It is the possibility of disappointment that makes it so compulsive.

It’s this that explains how the pull-to-refresh mechanism, whereby users swipe down, pause and wait to see what content appears, rapidly became one of the most addictive and ubiquitous design features in modern technology. “Each time you’re swiping down, it’s like a slot machine”, Harris says. “You don’t know what’s coming next. Sometimes it’s a beautiful photo. Sometimes it’s just an ad.”

yogaestoteric

October 12, 2018