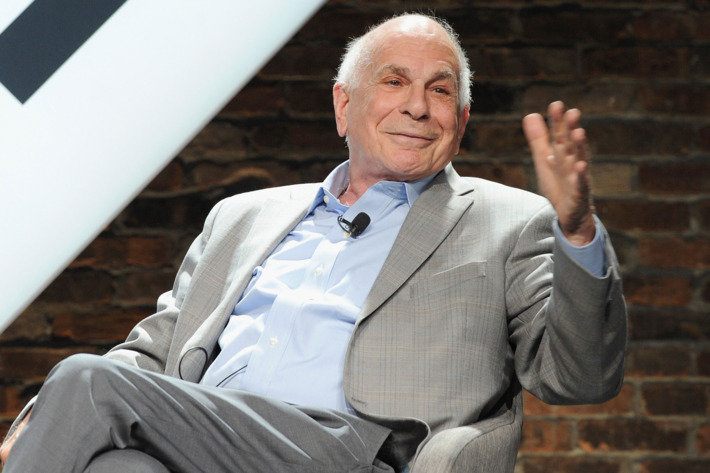

Remembering Daniel Kahneman: Seven theories that can help us understand how we think

The world of psychology recently suffered a profound loss with the passing of Daniel Kahneman, a pioneering figure whose work reshaped our understanding of the human mind. In this article, we reflect on some of the ways his contributions can help you understand yourself better.

- Anchoring

Along with his long-time collaborator Amos Tversky, Kahneman developed the concept and demonstrated the effect of anchoring. This is a phenomenon whereby, when we encounter a number early in a decision process, even when that number is completely irrelevant, we can be influenced by it and reluctant to move too far away from it. This can significantly affect the decisions and estimates that we make.

For example, if you are negotiating the price of something and the seller starts with an unusually high price, you might find yourself negotiating down from that high number rather than considering the actual value of the object you’re buying. Even if you end up paying less than the initial price offered, you might still pay more than you could have because your perception was “anchored” by that first high number.

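Researchers have shown that even random numbers can influence us, including when we know they are random. In one classic study by Tversky and Kahneman, participants’ estimates of the percentage of African countries in the United Nations shifted toward a number produced by spinning a wheel of fortune.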

Some techniques that may help mitigate this effect include:

- Consider-the-opposite strategy: Reflect on reasons why the starting number could be wrong.

- Consult with third parties: Getting a second opinion from someone who is not affected by the anchor can provide a fresh perspective.

- Preparation with data: Before entering negotiations or making decisions, gather relevant factual data. Knowing the actual market value, historical data, or other objective metrics can help anchor your decisions to factual information rather than arbitrary starting points proposed by others.

- Prospect Theory

For a long time, the most widely accepted view of how people make decisions under uncertainty was expected utility theory. This theory imagines that people are totally rational and that, when faced with a decision, they weigh the benefits and risks of each possible outcome, considering how likely each outcome is to occur. Then they choose the option that, on average, offers the most benefit – even if it’s not guaranteed to work out every time.
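To make the idea concrete, here is a minimal sketch of how an expected-utility decision-maker would compare two options. The options, probabilities, and utility numbers are hypothetical and chosen only for illustration; they do not come from Kahneman’s work.

```python
def expected_utility(outcomes):
    """Return the probability-weighted average utility of one option.

    `outcomes` is a list of (probability, utility) pairs whose
    probabilities are assumed to sum to 1.
    """
    return sum(probability * utility for probability, utility in outcomes)


# Hypothetical options: a sure thing versus a coin flip.
safe_bet = [(1.0, 50.0)]                 # always worth 50
risky_bet = [(0.5, 120.0), (0.5, 0.0)]   # worth 120 half the time, 0 otherwise

options = {"safe": safe_bet, "risky": risky_bet}

# A purely "rational" chooser picks whichever option has the higher average.
best = max(options, key=lambda name: expected_utility(options[name]))
```

In this sketch the risky bet has the higher average payoff, so the theory predicts it will be chosen, even though it pays nothing half the time.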

However, Kahneman challenged this belief when he developed prospect theory. According to this newer theory, people do not always act in purely rational ways that maximize utility when making decisions involving uncertainty. Instead, they are heavily (and sometimes irrationally) influenced by the potential losses and gains (aka, the prospects) they perceive in making a decision.

One aspect of prospect theory posits that losses generally have a greater emotional impact on people than gains of the same size – a phenomenon known as “loss aversion”.

For example: Imagine you invested $1,000 in a stock, and over time the value of that investment fluctuates. At one point, it reaches $1,500, which you get accustomed to, but it later drops to $1,200. Although your investment is currently worth $1,200, a $200 gain on your initial investment, you might hesitate to sell because you’re focused on the $300 “loss” from the peak value of $1,500, rather than on whether you think the stock is a good investment to own at $1,200. The psychological impact of losing $300 from the peak may feel more significant than the $200 gain from the initial investment.
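The stock example can be sketched with a simple value function in the spirit of prospect theory. The function name and structure are illustrative, and the default parameters (a loss-aversion factor of 2.25 and a curvature of 0.88) are the estimates Tversky and Kahneman reported in 1992; treat them as assumptions, since the article itself gives no numbers.

```python
def subjective_value(outcome, reference, loss_aversion=2.25, curvature=0.88):
    """Rough felt value of an outcome relative to a reference point.

    Gains and losses are measured from `reference`, and losses are
    scaled up by `loss_aversion` so they weigh more than equal gains.
    """
    change = outcome - reference
    if change >= 0:
        return change ** curvature
    return -loss_aversion * ((-change) ** curvature)


# Same $1,200 holding, judged against two different reference points.
felt_from_purchase = subjective_value(1200, reference=1000)  # a $200 gain
felt_from_peak = subjective_value(1200, reference=1500)      # a $300 "loss"
```

Under these assumptions, the same $1,200 feels mildly positive when measured from the purchase price and strongly negative when measured from the peak, which is one way to picture why the reference point you adopt matters so much.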

Some researchers propose alternative explanations for the behaviors attributed to loss aversion. For instance, they suggest that what appears to be loss aversion could instead be explained by other factors, such as risk sensitivity, changing wealth levels, or a general reluctance to accept any change from the status quo. Whatever the right explanation is, Kahneman’s theory has been hugely influential and has opened the door to a more nuanced view of decision-making.

- Focusing Illusion

According to Kahneman, “Nothing in life is as important as you think it is while you are thinking about it.” This pithy statement captures the essence of the focusing illusion, which occurs when you overvalue certain aspects of a situation because they are (or have recently been) the focus of your attention. For instance, Kahneman et al. found that if you are asked to think about your income and are then immediately asked to judge how satisfied you are with your life, you will give your income more weight in that judgment.

This ambiguity in how we evaluate life satisfaction raises another issue that Kahneman explored in his work. Is true happiness an immediate, moment-to-moment experience of feeling good, repeated again and again, or is it a more generalized, reflective judgment about one’s life overall? In other words, Kahneman asked us to reflect on whether happiness is found in the “experiencing self” or the “remembering self.”

- Peak-End Rule

This rule suggests that your memory of past events may be disproportionately influenced by two aspects:

- the most intense point (the peak), and

- the ending of the experience.

For example, if you went on a vacation that was generally enjoyable but ended with a stressful flight home, you might recall the vacation as less enjoyable than it really was because of the unpleasant ending. Conversely, you might remember a film that had an emotionally resonant high point and a good ending as being excellent even if you were bored during other scenes.

When you are reflecting on how well or poorly something has gone, it can be helpful to remember that your memory is biased by this rule. Was the experience good overall, or is it only your memory of it that is good? These are not necessarily the same.
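The gap between the experience and the memory of it can be illustrated with a small sketch. It assumes a common simplification of the peak-end rule, in which the remembered rating is the average of the most intense moment and the final moment; the moment-by-moment ratings for the vacation are hypothetical.

```python
def experienced_average(ratings):
    """Average of every moment's rating: how the experience actually went."""
    return sum(ratings) / len(ratings)


def peak_end_estimate(ratings):
    """Simplified peak-end memory: mean of the most intense and the last moment."""
    peak = max(ratings, key=abs)  # most intense moment, pleasant or unpleasant
    end = ratings[-1]
    return (peak + end) / 2


# Hypothetical ratings from -10 (awful) to 10 (wonderful):
# five enjoyable days followed by a stressful flight home.
vacation = [7, 8, 6, 8, 7, -6]

lived = experienced_average(vacation)      # what the days were like on average
remembered = peak_end_estimate(vacation)   # what memory may report afterwards
```

In this sketch the lived average is clearly positive, while the peak-end estimate is dragged down toward neutral by the final flight.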

- Planning Fallacy

Another of Kahneman and Tversky’s discoveries is the planning fallacy: put simply, it’s the ingrained human tendency to underestimate the amounts of time and resources required to complete projects. This fallacy frequently leads to overly optimistic project timelines and budgets.

Why does this occur? One theory is that it’s because large projects almost always involve some unexpected delays, steps, and challenges. But because we can’t predict in advance what they will be, it’s easy to act as though they won’t occur.

- Availability Heuristic

Here’s a quick question for you. Which would you guess occurs more frequently in written English: words beginning with the letter K, or words with the letter K in the third position?

Kahneman and Tversky (1973) found that around two-thirds of the students they asked incorrectly guessed that words beginning with K are more common than those with K in the third position (e.g. make, ask). To explain this, they proposed the availability heuristic.

Like all cognitive heuristics, the availability heuristic is a mental shortcut or “rule of thumb” that allows us to generate an intuitive answer to a difficult question. When estimating which of two occurrences is more common, we intuitively feel that the one for which we can more easily bring examples to mind is the more common one.

Because it’s easier to think of words starting with a given letter than with a letter in the third position, the availability heuristic gives the misleading intuition that words beginning with K are more common than those with K in the third position.

The availability heuristic sometimes produces reliable intuitions, and at other times it misleads. One area where research has shown this to be particularly relevant is risk perception. This heuristic can significantly affect how much danger we believe different kinds of hazards pose, simply because some vivid hazards (like terrorism) may be easier to recall than more common ones (like deaths caused by slipping and falling). Sensational news coverage can further exacerbate this effect.

- Representativeness Heuristic

This heuristic describes how you might misjudge the probability of an event by finding a similar known event and basing your judgment on their similarity, instead of on the underlying frequencies. For example, if you meet someone who is shy and likes reading, you might think it is more likely that they are a librarian than a farmer because these traits fit the stereotype of a librarian, despite the fact that there are many more farmers than librarians with these characteristics. The reason is that the number of farmers (around 1.89 million in the U.S. in 2023) is so much larger than the number of librarians (around 127,790 in the U.S. as of May 2021).

Given the fact that there are so many more farmers than librarians, it is more probable that a randomly selected person would be a farmer rather than a librarian, even if they also embody characteristics like shyness or a love of reading that are librarian stereotypes.

Reflecting on how this heuristic can lead us into error can encourage us to pay attention to something often neglected: base rates. Base rates are essentially the frequency of a particular characteristic or event in the general population. For example, if you’re trying to figure out whether someone’s occupation is more likely to be a librarian or a farmer, your guess should take into account how many librarians there are compared to farmers in the general population in addition to how likely the person is to have these characteristics if they are either profession.
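Here is a rough sketch of how base rates and trait likelihoods combine. The population counts are the ones quoted above; the two trait probabilities are assumptions made up for illustration (shy readers are taken to be four times as common among librarians as among farmers), not measured values.

```python
def probability_librarian(librarians, farmers,
                          p_traits_given_librarian, p_traits_given_farmer):
    """Chance that a shy reader is a librarian, given they are one of the two."""
    matching_librarians = librarians * p_traits_given_librarian
    matching_farmers = farmers * p_traits_given_farmer
    return matching_librarians / (matching_librarians + matching_farmers)


# Base rates quoted in the article (U.S. figures).
FARMERS = 1_890_000
LIBRARIANS = 127_790

# Assumed, purely illustrative likelihoods of being shy and fond of reading.
P_TRAITS_GIVEN_LIBRARIAN = 0.40
P_TRAITS_GIVEN_FARMER = 0.10

p_librarian = probability_librarian(
    LIBRARIANS, FARMERS, P_TRAITS_GIVEN_LIBRARIAN, P_TRAITS_GIVEN_FARMER
)
```

Even with the stereotype strongly favoring librarians, the result under these assumptions is only about a one-in-five chance that the person is a librarian, because the farmer population is so much larger.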

Ignoring these base rates and focusing only on personal traits that match our stereotypes (like assuming someone who reads a lot is more likely to be a librarian than a farmer) can lead us to make incorrect assumptions. This is a common mistake we make under the influence of the representativeness heuristic, where our judgments are skewed by how much a person resembles our mental image of a certain category, rather than by the actual statistical likelihood of their belonging to that category. By paying attention to base rates, we can make more informed and accurate decisions, reducing the risk of falling into the trap of our biases.

Daniel Kahneman (March 5, 1934 – March 27, 2024) was an Israeli-American cognitive scientist best known for his work on the psychology of judgment and decision-making. He is also known for his work in behavioral economics, for which he was awarded the 2002 Nobel Memorial Prize in Economic Sciences together with Vernon L. Smith.

yogaesoteric

June 8, 2024