The brain can store nearly 10 times more data than previously thought, study confirms

Scientists harnessed a new method to precisely measure the amount of information the brain can store, and it could help advance our understanding of learning.

The brain may be able to hold nearly 10 times more information than previously thought, a new study confirms.

Similar to computers, the brain’s memory storage is measured in “bits,” and the number of bits it can hold rests on the connections between its neurons, known as synapses. Historically, scientists thought synapses came in a fairly limited number of sizes and strengths, and this in turn limited the brain’s storage capacity. However, this theory has been challenged in recent years — and the new study further backs the idea that the brain can hold about 10-fold more than once thought.

In the study, researchers developed a highly precise method to assess the strength of connections between neurons in part of a rat’s brain. These synapses form the basis of learning and memory, as brain cells communicate at these points and thus store and share information.

By better understanding how synapses strengthen and weaken, and by how much, the scientists more precisely quantified how much information these connections can store. The analysis, published April 23 in the journal Neural Computation, demonstrates how this new method could increase our understanding not only of learning but also of aging and diseases that erode connections in the brain.

“These approaches get at the heart of the information processing capacity of neural circuits,” Jai Yu, an assistant professor of neurophysiology at the University of Chicago who was not involved in the research, told Live Science in an email. “Being able to estimate how much information can potentially be represented is an important step towards understanding the capacity of the brain to perform complex computations.”

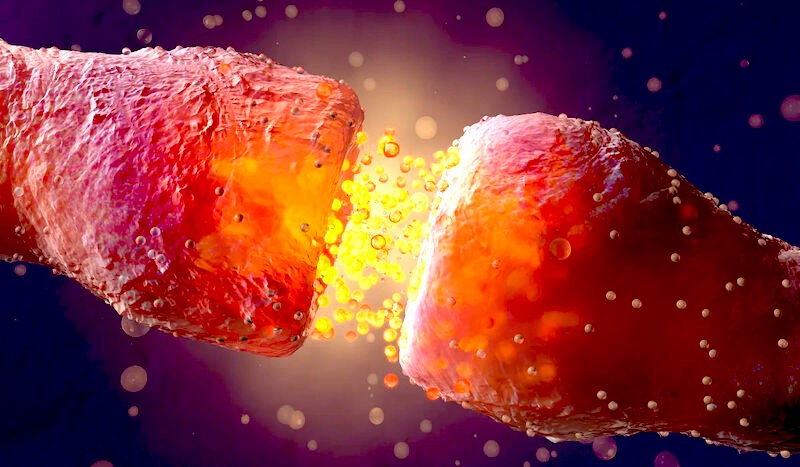

In the human brain, there are more than 100 trillion synapses between neurons. Chemical messengers are launched across these synapses, facilitating the transfer of information across the brain. As we learn, the transfer of information through specific synapses increases. This “strengthening” of synapses enables us to retain the new information. In general, synapses strengthen or weaken in response to how active their constituent neurons are — a phenomenon called synaptic plasticity.

However, as we age or develop neurological diseases, such as Alzheimer’s, our synapses become less active and thus weaken, reducing cognitive performance and our ability to store and retrieve memories.

Scientists can measure the strength of synapses by looking at their physical characteristics. Additionally, messages sent by one neuron will sometimes activate a pair of synapses, and scientists can use these pairs to study the precision of synaptic plasticity. In other words, given the same message, does each synapse in the pair strengthen or weaken in exactly the same way?

Measuring the precision of synaptic plasticity has proven difficult in the past, as has measuring how much information any given synapse can store. The new study addresses both challenges.

To measure synaptic strength and plasticity, the team harnessed information theory, a mathematical way of understanding how information is transmitted through a system. This approach also enables scientists to quantify how much information can be transmitted across synapses while accounting for the “background noise” of the brain.
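To make the idea of measuring information in bits concrete, here is a minimal Python sketch of Shannon entropy, the basic quantity of information theory. It assumes synaptic strengths have already been sorted into a few discrete categories (the category labels below are hypothetical), and it leaves out the noise correction mentioned above, so it illustrates the concept rather than reproducing the study's analysis.

```python
import math
from collections import Counter

def shannon_entropy_bits(observations):
    """Shannon entropy, in bits, of the empirical distribution of discrete labels.

    Illustrative only: assumes each observation is an already-binned
    synaptic-strength category; no noise correction is applied.
    """
    counts = Counter(observations)
    total = sum(counts.values())
    # H = sum over categories of p * log2(1 / p), where p = count / total
    return sum((c / total) * math.log2(total / c) for c in counts.values())

# Hypothetical labels: four strength categories, each observed equally often
print(shannon_entropy_bits(["weak", "medium", "strong", "very strong"]))
```

With four equally common categories the entropy is 2 bits; if every synapse fell into the same category it would be 0 bits, because there would be nothing to distinguish.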

This transmitted information is measured in bits, such that a synapse with a higher number of bits can store more information than one with fewer bits, Terrence Sejnowski, co-senior study author and head of the Computational Neurobiology Laboratory at The Salk Institute for Biological Studies, told Live Science in an email. One bit corresponds to a synapse sending transmissions at two strengths, while two bits allow for four strengths, and so on.
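The pattern Sejnowski describes is a power of two: b bits can label 2^b distinct strengths, and telling apart N equally likely strengths takes log2(N) bits. A minimal Python sketch of that conversion follows; the function names are illustrative and not taken from the study.

```python
import math

def levels_from_bits(bits: float) -> float:
    """Number of distinguishable strengths that `bits` of information can label: 2 ** bits."""
    return 2 ** bits

def bits_from_levels(levels: int) -> float:
    """Bits needed to tell apart `levels` equally likely strengths: log2(levels)."""
    return math.log2(levels)

for b in (1, 2, 3):
    print(f"{b} bit(s) -> {levels_from_bits(b)} strengths")
```

Running the loop prints 2, 4 and 8 strengths for 1, 2 and 3 bits, matching the "two strengths, four strengths, and so on" progression.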

The team analyzed pairs of synapses from a rat hippocampus, a region of the brain that plays a major role in learning and memory formation. These synapse pairs were neighbors and they activated in response to the same type and amount of brain signals. The team determined that, given the same input, these pairs strengthened or weakened by exactly the same amount — suggesting the brain is highly precise when adjusting a given synapse’s strength.
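One common way to quantify how closely two paired measurements match is the coefficient of variation (standard deviation divided by mean), where values near zero mean the two synapses ended up almost the same size. The Python sketch below applies it to synapse pairs; using this particular statistic, the function names, and the example pairs are all assumptions for illustration, not a description of the study's exact procedure or data.

```python
import statistics

def pair_cv(a: float, b: float) -> float:
    """Coefficient of variation (sample std / mean) for one synapse pair."""
    return statistics.stdev([a, b]) / statistics.mean([a, b])

def median_pair_cv(pairs):
    """Median CV across many pairs; lower values mean more precise, matched plasticity."""
    return statistics.median(pair_cv(a, b) for a, b in pairs)

# Hypothetical strength measurements (arbitrary units) for three synapse pairs
hypothetical_pairs = [(1.00, 1.02), (0.80, 0.79), (1.50, 1.50)]
print(median_pair_cv(hypothetical_pairs))
```

A pair whose two members are identical scores exactly 0, so a low median across many pairs is the kind of result that would support the idea of highly precise strength adjustments.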

The analysis suggested that synapses in the hippocampus can store between 4.1 and 4.6 bits of information. The researchers had reached a similar conclusion in an earlier study of the rat brain, but at that time, they’d crunched the data with a less-precise method. The new study helps confirm what many neuroscientists now assume — that synapses carry much more than one bit each, Kevin Fox, a professor of neuroscience at Cardiff University in the U.K. who was not involved in the research, told Live Science in an email.
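As a rough back-of-the-envelope check, assuming the simple rule that b bits correspond to 2^b equally likely strengths, the reported range translates into roughly 17 to 24 distinguishable synaptic strengths. This conversion is an illustration, not a figure stated in the article.

$$
N = 2^{b}, \qquad 2^{4.1} = 16 \cdot 2^{0.1} \approx 17.1, \qquad 2^{4.6} = 16 \cdot 2^{0.6} \approx 24.3
$$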

The findings are based on a very small area of the rat hippocampus, so it’s unclear how they’d scale to a whole rat or human brain. It would be interesting to determine how this capacity for information storage varies across the brain and between species, Yu said.

In the future, the team’s method could also be used to compare the storage capacity of different areas of the brain, Fox said. It could likewise be used to study a single area of the brain in both healthy and diseased states.

yogaesoteric

June 13, 2024