A.I. Is Monitoring You Right Now and Here’s How They’re Using Your Data

There’s wisdom in crowds, and scientists are using artificial intelligence and machine learning to predict political crises and forecast the future.

We live in an age of mass surveillance. Big data, combined with the ever-increasing power of artificial intelligence, means that our actions are being recorded and stored like never before. This information is mined to identify social media trends for targeted advertising, but it is also being used to predict riots, election outcomes and disease epidemics. And that’s not to mention the vast networks of surveillance cameras that could be used to track crowds and individuals in real time.

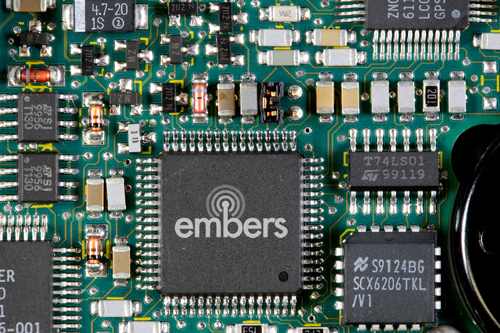

This is EMBERS, a computer forecasting system that sifts through the data you post on social media every day. It mines the platforms you’d expect, like Twitter and Facebook, and it also tracks sources you might not expect, like restaurant apps, traffic maps, currency rates and even food prices. “Data from tweets and social media is useful for predicting both disease outbreaks, and events like civil unrest, domestic political crisis, elections, and so on.”

“You can use these nontraditional data sources, like restaurant reservation information, hospital imagery and so on. EMBERS mines big data for trends rather than information on individuals.” For example, if EMBERS detects a wave of cancelled restaurant reservations followed by hospital parking lots filling up, the system can infer that there is probably an outbreak of food poisoning or flu. And it’s remarkably accurate: EMBERS correctly predicted hantavirus outbreaks in Argentina and Chile in 2013, as well as political uprisings in Brazil and Venezuela in 2013 and 2014. In fact, EMBERS is correct in its predictions about 90% of the time.

But EMBERS does have its limits. While it can mine public data to forecast major social events before they happen, it can’t predict spontaneous events like crowd stampedes or terrorist attacks, an increasing concern in an overcrowded and unstable world. Predicting those events is what Dr. Mubarak Shah and his team are trying to do. They are using video surveillance, AI and complex mathematical algorithms to track and predict the movements of dense crowds and of the individuals within them. “Crowd analysis is a very interesting area and very challenging. When you have thousands of people, it becomes very, very complicated.”

This is where A.I. comes in. It can help spot abnormal patterns in a crowd, such as bottlenecks. Take the Hajj pilgrimage in Saudi Arabia: in 2015, hundreds of people were crushed to death in a stampede. In the future, the researchers say, computers may be able to detect bottlenecks in real time so that pilgrims can be diverted to less crowded areas, avoiding stampedes and, ultimately, saving lives.

Another application Shah’s team is working on is tracking individuals in crowds in real time. “We want to be able to track that person: where the person enters, where he’s going, where exactly he’s sitting and so on.” Currently, this kind of surveillance is done manually, but computers are beginning to be able to detect individuals acting suspiciously as they move through a crowd. “We want to be able to analyze the video in each camera. We detect people, we track, and we identify and so on.”

Using video surveillance and the camera networks available in major cities, Shah’s team is working on using AI to follow individuals from place to place. “You detect a person in one camera, then it disappears because it’s not visible. And after some time it reappears in another camera, maybe at a distance. Re-identification in the crowd is much, much harder because there are lots of people.”

Data from camera networks, mined for suspicious behavior, could help stop future bombings. But it isn’t easy, and the researchers aren’t there yet: huge amounts of data still need to be annotated before machines can understand human behavior. The technology could give law enforcement extra eyes, make officers more efficient, help solve crimes and help people. But there is a downside. How much privacy are we willing to give up to be safe? The implications of these technologies are vast, and perhaps the answer depends on how much you trust the people mining your data.

yogaesoteric

April 26, 2019