The Club of Rome and the Rise of the “Predictive Modelling” Mafia (1)

While many are now familiar with the manipulation of predictive modelling during the covid-19 crisis, a network of powerful Malthusians has used the same tactics for the better part of the last century in order to sell and impose its agenda.

While much propaganda has gone into convincing the world that eugenics disappeared with the defeat of Hitler in 1945, the reality is far removed from this popular fantasy.

It is worth remembering the origins of cybernetics as a new “science of control” created during World War II by a nest of followers of Lord Bertrand Russell who had a single mission: to shape the thinking of both the public and a new managerial elite class who would serve as instruments for a power they were incapable of understanding.

Also, consider the science of limits that was infused into the scientific community at the turn of the 20th century with the imposition of the assumption that humanity, the biosphere, and even the universe itself were closed systems, defined by the second law of thermodynamics (aka: entropy) and thus governed by the tendency towards decay, heat death and ever-decreasing potential for creative transformation. The field of cybernetics would also become the instrument used to advance a new global eugenics movement that later gave rise to transhumanism, an ideology which today sits at the heart of the 4th industrial revolution as well as the “Great Reset.”

In this article, we will evaluate how this sleight of hand occurred and how the consciousness of the population and governing class alike has been induced to participate in our own annihilation. Hopefully, in the course of this exercise, we will better appreciate what modes of thinking can still be revived in order to ensure a better future more becoming of a species of dignity.

Neil Ferguson’s Sleight of Hand

In May 2020, Imperial College’s Neil Ferguson was forced to resign from the UK’s Scientific Advisory Group for Emergencies (SAGE). The public reason given was Neil’s amorous escapades with a married woman during a draconian lockdown in the UK at the height of the first wave of hysterics. Neil should have also been removed from all his positions at the UN, WHO and Imperial College (most of which he continues to hold) and probably jailed for his role in knowingly committing fraud for two decades.

After all, Neil was not only personally responsible for the lockdowns that were imposed onto the people of the UK, Canada, much of Europe and the USA, but as the world’s most celebrated mathematical modeller, he had been the innovator of models used to justify crisis management and pandemic forecasting since at least December 2000.

It was at this time that Neil joined Imperial College after spending years at Oxford. He soon found himself advising the UK government on the new “foot and mouth” outbreak of 2001.

Neil went to work producing statistical models extrapolating linear trend lines into the future and came to the conclusion that over 150,000 people would die from the disease unless 11 million sheep and cattle were killed. Farms were promptly decimated by government decree, and Neil was awarded an Order of the British Empire for his service to the cause of creating scarcity through a manufactured health crisis.

In 2002, Neil used his mathematical models to predict that 50,000 people would die of Mad Cow Disease, which ended up causing a total of only 177 deaths.

In 2005, Neil again aimed for the sky and predicted 150 million people would die of Bird Flu. His computer models missed the mark by 149,999,718 deaths when only 282 people died of the disease between 2003 and 2008.

In 2009, Neil’s models were used again by the UK government to predict 65,000 deaths due to Swine flu, which ended up killing about 457 people.
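The arithmetic behind these comparisons can be checked with a short sketch. It takes the predicted and reported figures exactly as quoted in this article, without independently verifying them, and the names `quoted_figures`, `shortfall`, and `overestimate_factor` are hypothetical labels chosen only for illustration.

```python
# Figures exactly as quoted in this article: (predicted deaths, reported deaths).
# They are taken at face value here and are not independently verified.
quoted_figures = {
    "Mad Cow Disease (2002)": (50_000, 177),
    "Bird Flu (2005)": (150_000_000, 282),
    "Swine flu (2009)": (65_000, 457),
}


def shortfall(predicted: int, reported: int) -> int:
    """Absolute gap between a prediction and the reported toll."""
    return predicted - reported


def overestimate_factor(predicted: int, reported: int) -> float:
    """How many times larger the prediction was than the reported toll."""
    return predicted / reported


for label, (predicted, reported) in quoted_figures.items():
    gap = shortfall(predicted, reported)
    factor = overestimate_factor(predicted, reported)
    print(f"{label}: gap of {gap:,} deaths, prediction about {factor:,.0f}x the reported toll")
```

The foot and mouth figure is left out because the article gives no reported death toll to compare it against.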

Despite his track record of embarrassing failures, Neil continued to find his star rising ever further into the stratosphere of science stardom. He soon became the Vice Dean of Imperial College’s Faculty of Medicine and a global expert of infectious diseases.

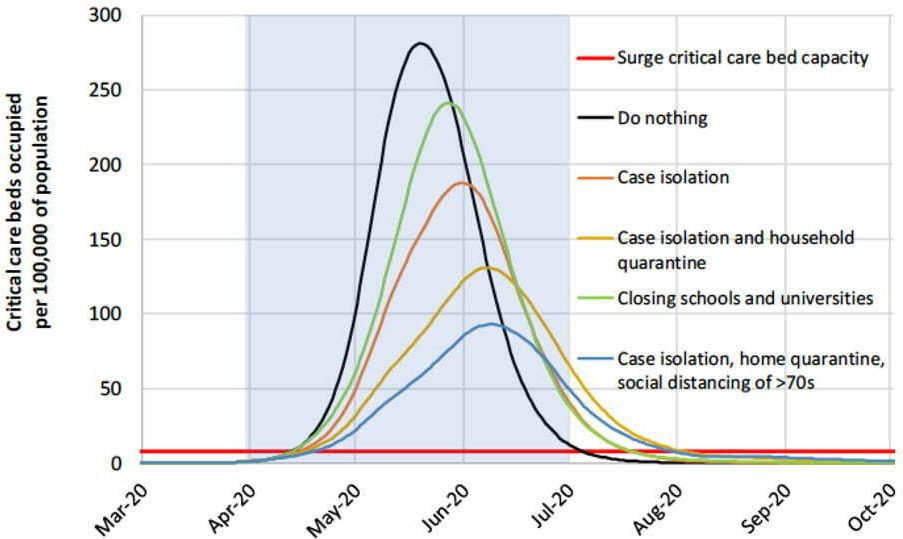

In 2019, he was assigned to head the World Health Organization’s Collaborating Centre for Infectious Disease Modelling, a position he continues to hold to this day. It was at this time that his outdated models were used to “predict” 500,000 covid deaths in the UK and two million deaths in the USA unless total lockdowns were imposed in short order. Under the thin veneer of “science”, his word became law and much of the world fell into lockstep chanting “two weeks to flatten the curve.”

When Neil was pressed to make the code used to generate his models available to the public for scrutiny in late 2020 (after it was discovered that the code was over 13 years old), he refused to budge, eventually releasing a heavily redacted version which was all but useless for analysis.

A Google software engineer with 30 years’ experience, writing under a pseudonym for The Daily Skeptic, analyzed the redacted code and had this to say:

“It isn’t the code Ferguson ran to produce his famous Report. What’s been released on GitHub is a heavily modified derivative of it, after having been upgraded for over a month by a team from Microsoft and others. This revised codebase is split into multiple files for legibility and written in C++, whereas the original program was a single 15,000 line file that had been worked on for a decade (this is considered extremely poor practice). A request for the original code was made but ignored, and it will probably take some kind of legal compulsion to make them release it. Clearly, Imperial are too embarrassed by the state of it ever to release it of their own free will, which is unacceptable given that it was paid for by the taxpayers and belongs to them.”

Besides taxpayers, the author should have also included Bill Gates, as his foundation donated millions of dollars to Imperial College and Neil directly over the course of two decades, but we’ll forgive her for leaving that one out.

Monte Carlo Methods: How the Universe Became a Casino

The Daily Skeptic author went further, striking at the core of Neil’s fraud when she identified the stochastic function underlying his predictive models. She writes:

“‘Stochastic’ is just a scientific-sounding word for ‘random.’ That’s not a problem if the randomness is intentional pseudo-randomness, i.e. the randomness is derived from a starting ‘seed’ which is iterated to produce the random numbers. Such randomness is often used in Monte Carlo techniques. It’s safe because the seed can be recorded and the same (pseudo-)random numbers produced from it in future.”
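The point about seeds in the passage above can be illustrated with a minimal sketch. It is a generic textbook toy (estimating pi by random sampling) and not a reconstruction of any code discussed in this article; the function name `estimate_pi`, the sample count, and the seed values are assumptions chosen only for illustration.

```python
import random


def estimate_pi(num_samples: int, seed: int) -> float:
    """Estimate pi by sampling random points in the unit square."""
    rng = random.Random(seed)  # private generator, fully determined by the seed
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point falls inside the quarter circle
            inside += 1
    return 4.0 * inside / num_samples


first_run = estimate_pi(100_000, seed=42)
second_run = estimate_pi(100_000, seed=42)  # same seed, same random numbers
other_seed = estimate_pi(100_000, seed=43)  # different seed, a different draw

print(first_run == second_run)  # the recorded seed makes the run repeatable
print(first_run, other_seed)
```

Because the generator is created from the seed inside the function, anyone who records the seed can regenerate the same sequence of pseudo-random numbers later, which is the property the quoted author describes as safe.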

The author is right to identify the stochastic (aka random) probability function at the heart of Neil’s models, and also correctly zeroes in on the blatant fudging of data and code to generate wildly irrational outcomes that have zero connection to reality. However, being a Google programmer who had herself been processed in an “information theory” environment, which presumes randomness to be at the heart of all reality, the author makes a blundering error by presuming that Monte Carlo techniques would somehow be useful in making predictions of future crises. As we will soon see, Monte Carlo techniques are a core problem across all aspects of human thought and policy making.

The Monte Carlo technique itself got its name from information theorist John von Neumann and his colleague Stanislaw Ulam, who saw in the games of chance played at casino tables the key to analyzing literally every non-linear system in existence, from atomic decay to economic behavior, neuroscience, climatology, biology, and even theories of galaxy formation. The Monte Carlo Casino in Monaco was the role model selected by von Neumann and Ulam to be used as the ideal blueprint that was assumed to shape all creation.

According to the official website of The Institute for Operations Research and the Management Sciences (INFORMS), it didn’t take long for Monte Carlo methods to be adopted by the RAND Corporation and the U.S. Air Force. The INFORMS site states:

“Although not invented at RAND, the powerful mathematical technique known as the Monte Carlo method received much of its early development at RAND in the course of research on a variety of Air Force and atomic weapon problems. RAND’s main contributions to Monte Carlo lie in the early development of two tools: generating random numbers, and the systematic development of variance-reduction techniques.”
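As a rough illustration of what “variance reduction” means in the passage above, the sketch below compares a plain Monte Carlo estimate with one using antithetic variates, a standard textbook technique. It is not claimed to be any method developed at RAND; the integrand (the average of exp(u) for u between 0 and 1), the seed, the sample count, and all function names are assumptions made for the example.

```python
import math
import random
import statistics


def plain_samples(rng: random.Random, n: int) -> list:
    """n independent evaluations of exp(U), with U uniform on [0, 1)."""
    return [math.exp(rng.random()) for _ in range(n)]


def antithetic_samples(rng: random.Random, n: int) -> list:
    """n/2 paired evaluations: each value averages exp(U) and exp(1 - U)."""
    pairs = []
    for _ in range(n // 2):
        u = rng.random()
        pairs.append(0.5 * (math.exp(u) + math.exp(1.0 - u)))
    return pairs


def mean_and_standard_error(samples: list) -> tuple:
    """Sample mean and its estimated standard error."""
    mean = statistics.fmean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, std_err


rng = random.Random(2023)  # fixed seed so the comparison is repeatable
n = 10_000                 # same number of function evaluations for both methods

plain_mean, plain_se = mean_and_standard_error(plain_samples(rng, n))
anti_mean, anti_se = mean_and_standard_error(antithetic_samples(rng, n))

exact = math.e - 1.0  # known exact value of the integral, for reference
print(f"exact      {exact:.5f}")
print(f"plain      {plain_mean:.5f} +/- {plain_se:.5f}")
print(f"antithetic {anti_mean:.5f} +/- {anti_se:.5f}")
```

Pairing each draw u with its mirror image 1 - u makes the two evaluations negatively correlated, so their average fluctuates less than two independent draws would for the same number of function evaluations.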

RAND Corporation was the driving force for the adoption of cybernetics as the science of control within US foreign policy circles during the Cold War.

The person assigned to impose cybernetics and its associated “systems” planning into political practice was Lord President of the British Empire’s Scientific Secretariat Alexander King, acting here as Director General of Scientific Affairs of the Organisation for Economic Co-operation and Development (OECD) and advisor to NATO. His post-1968 role as co-founder of the Club of Rome will be discussed shortly.

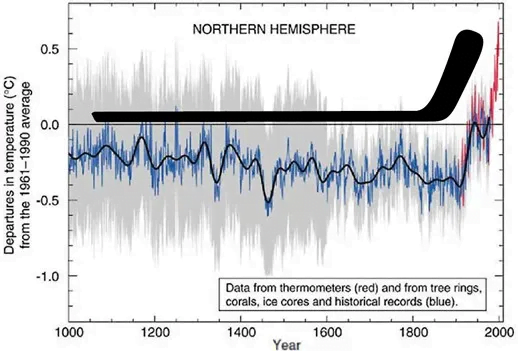

Whereas selling end-times scenarios to a gullible populace took the form of such Gates-funded stochastic models utilizing Monte Carlo techniques like those deployed by Neil Ferguson, the selling of end-times scenarios in the form of global warming has also used the exact same techniques, albeit for a slightly longer time frame. As Dr. Tim Ball proved in his successful lawsuits against the IPCC’s Michael Mann of “Hockey Stick” fame, those end-times global warming models have also used stochastic formulas (aka randomness functions) along with Monte Carlo techniques to consistently generate irrationally high heating curves in all climate models.

In an October 2004 article in Technology Review, author Richard Muller described how two Canadian scientists proved that this fraud underlies Mann’s Hockey Stick model, writing:

“Canadian scientists Stephen McIntyre and Ross McKitrick have uncovered a fundamental mathematical flaw in the computer program that was used to produce the hockey stick. This method of generating random data is called Monte Carlo analysis, after the famous casino, and it is widely used in statistical analysis to test procedures. When McIntyre and McKitrick fed these random data into the Mann procedure, out popped a hockey stick shape!”

Not coincidentally, these same stochastic models utilizing Monte Carlo techniques were also used in crafting economic models justifying the casino economy, ridden with high-frequency trading, of the post-1971 era of myopic consumerism and deregulation.

The Club of Rome and World Problematique

The age of “predictive doomsday models” was given its most powerful appearance of “scientific respectability” through the efforts of an innocuous sounding organization called The Club of Rome.

Historian F. William Engdahl wrote of the Club’s origins:

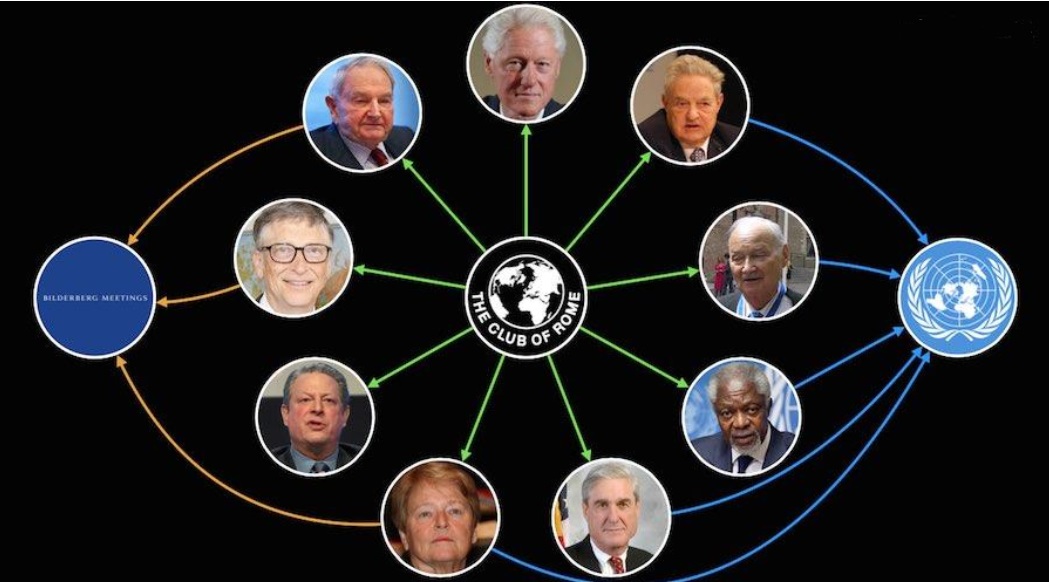

“In 1968 David Rockefeller founded a neo-Malthusian think tank, The Club of Rome, along with Aurelio Peccei and Alexander King. Aurelio Peccei, was a senior manager of the Fiat car company, owned by the powerful Italian Agnelli family. Fiat’s Gianni Agnelli was an intimate friend of David Rockefeller and a member of the International Advisory Committee of Rockefeller’s Chase Manhattan Bank. Agnelli and David Rockefeller had been close friends since 1957. Agnelli became a founding member of David Rockefeller’s Trilateral Commission in 1973. Alexander King, head of the OECD Science Program was also a consultant to NATO.”

The think tank was founded by two self-professed Malthusians named Aurelio Peccei and OECD Director General for Scientific Affairs Sir Alexander King who promulgated a new ideology to the world: The age of scientific progress and industrial growth must stop in order for the world to reset its values under a new paradigm of zero-technological growth.

Both Peccei and King were also advocates of a new pseudoscience dubbed “World Problematique,” which was developed in the early 1960s and can simply be described as “the science of global problems.” Unlike other branches of science, solving problems facing humanity was not the concern for followers of Problematique. Its adherents asserted that the future could be known by first analyzing the infinite array of “problems” which humanity creates in modifying the environment.

To illustrate, an example: Thinking people desire to mitigate flood damage in a given area, so they build a dam. But then damage is done to the biodiversity of that region. Problem.

Another example: Thinking people wish to have better forms of energy and discover the structure of the atom, leading to nuclear power. Then, new problems arise like atomic bombs and nuclear waste. Problem.

A final example: A cure for malaria is discovered for a poor nation. Mortality rates drop but now population levels rise, putting stress on the environment. Problem.

This list can go on, literally forever.

An adherent of Problematique would fixate on every “problem” caused by humans naively attempting to solve problems. They would note that every human intervention leads to disequilibrium, and thus unpredictability. The Problematique-oriented consciousness would conclude that if the “problem that causes all problems” was eliminated, then a clean, pre-determined world of perfect stasis, and thus predictability, would ensue.

Reporting on the growth of the Club of Rome’s World Problematique agenda in 1972, OECD Vice Chair and Club of Rome member Hugo Thiemann told Europhysics News:

“In the past, research had been aimed at ‘understanding’ in the belief that it would help mankind. After a period of technological evolution based on this assumption, that belief was clearly not borne out by experience. Now, there was a serious conflict developing between planetary dimensions and population, so that physicists should change to consider future needs. Science policy should be guided by preservation of the biosphere.”

On page 118 of an autobiographical account of the Club of Rome entitled The First Global Revolution published in 1991, Sir Alexander King echoed this philosophy most candidly when he wrote:

“In searching for a new enemy to unite us, we came up with the idea that pollution, the threat of global warming, water shortages, famine and the like would fit the bill. All these dangers are caused by human intervention, and it is only through changed attitudes and behavior that they can be overcome. The real enemy then, is humanity itself.”

The Club of Rome quickly set up branches across the Western world, with members drawn from select ideologues in the political, business, and scientific communities who all agreed that society’s best form of governance was a scientific dictatorship. The Canadian branch of the organization was co-founded by the hyperactive Maurice Strong himself in 1970 alongside a nest of Fabians and Rhodes Scholars including Club of Rome devotee Pierre Trudeau. More on this will be said below.

One particularly interesting 1973 propaganda film was produced by ABC News and showcases the Club of Rome-MIT “innovation” in computer modelling. Describing the new modelling technology unveiled by MIT and the Club of Rome, the video’s narrator states:

“What it does for the first time in man’s history on the planet is to look at the world as one system. It shows that Earth cannot sustain present population and industrial growth for much more than a few decades.”

Author: Matthew Ehret

yogaesoteric

January 29, 2023