The Shocking Truth About Skin Cancer: What You’re Not Told About the Sun

The story at a glance:

- Skin cancer is by far the most commonly diagnosed cancer in the United States. To prevent it, the public is constantly urged to avoid the sun. But while the relatively benign types of skin cancer can be caused by excessive sun exposure, most skin cancer deaths are due to a lack of sunlight.

- This is unfortunate because sunlight is arguably the most important nutrient for the human body, and avoiding it doubles the mortality rate and significantly increases the risk of cancer.

- It’s fair to say this dynamic exists because the dermatology profession (with the help of a top-notch PR firm) rebranded itself as the front line against skin cancer, which has made dermatology one of the highest-paying medical specialties anywhere. Unfortunately, despite the billions of dollars spent each year to fight the disease, the number of skin cancer deaths has not decreased.

Ever since I was a little kid, it struck me as odd that everyone was so hysterical about telling me to avoid sunlight and always wear sunscreen whenever we did anything outside, so I did my best not to comply. As I got older, I noticed that the veins under my sun-exposed skin not only felt good but also dilated when I was out in the sun, which I took as a sign that my body was craving sunlight and wanted to absorb it into the bloodstream. I later learned that a pioneering researcher had discovered that people who wore glasses blocking certain parts of the light spectrum (e.g., most glass blocks UV light) from entering the eyes (the most transparent part of the body) experienced significant health changes, which could be treated by giving them special glasses that did not block that part of the spectrum.

Later, when I became a medical student (by which time I was familiar with the myriad benefits of sunlight), I was struck by how neurotic dermatologists are about avoiding sunlight. For example, I learned from fellow students that dermatologists in northern latitudes (where sunlight exposure is so low that people suffer from seasonal affective disorder) tell their students to wear sunscreen and clothing that covers most of the body when outdoors, and that every patient I treated there was lectured on the importance of avoiding sunlight. At that point, my perspective on the subject changed to “this crusade against the sun is definitely coming from the dermatologists” and “what on Earth is wrong with these people?” A few years ago, I learned the final piece of the puzzle from Dr. Robert Yoho and his book Butchered by Healthcare.

The monopolization of medicine

Over the course of my life, I have noticed three strange patterns in the medical industry:

- They will encourage healthy activities that people are unlikely to do (such as exercising five times a week).

- They will actively promote unhealthy activities that make money for the industry (e.g., eating processed foods or taking a variety of unsafe and ineffective medicines).

- They will actively attack beneficial activities that are easy to perform (e.g., yoga, sunbathing, or eating eggs, raw milk products and butter).

To the best of my knowledge, much of this has its roots in the scandalous history of the American Medical Association (AMA), which began when George H. Simmons, MD, took over the organization in 1899 (at a time when doctors were going out of business because their treatments were barbaric and didn’t work). He started a program that awarded the AMA seal of approval to manufacturers in exchange for disclosing their ingredients and agreeing to advertise in many AMA publications (but they did not have to prove that their products were safe or effective). This maneuver was successful, resulting in a fivefold increase in advertising revenue and a ninefold increase in physician membership in just ten years.

At the same time, the AMA sought to monopolize the medical industry, for example by establishing a Council on Medical Education (which essentially declared the AMA’s approach the only credible way to practice medicine), which allowed it to become the national accrediting body for medical schools. This in turn allowed it to suppress the teaching of many competing models of medicine, such as homeopathy, chiropractic, naturopathy, and, to a lesser extent, osteopathy, since states often refused to license graduates of schools with a poor AMA rating.

Likewise, Simmons (along with his successor Fishbein, who served from 1924 to 1950) set up a propaganda department in 1913 to attack all unconventional medical treatments and anyone who practiced them (whether a doctor or not). Fishbein was very good at what he did and was often able to organize massive media campaigns against anything he called “quackery” that were heard by millions of Americans (at a time when the country was much smaller).

Once Simmons and Fishbein had created this monopoly, they quickly took advantage of it. This included blackmailing pharmaceutical companies into advertising with them, demanding that the rights to a variety of treatments be sold to the AMA, and sending the FDA or FTC after anyone who refused to sell (which was proven in court in at least one case, when one of Fishbein’s associates, who believed what he was doing was wrong, testified against him). Because of this, many remarkable medical innovations have been successfully erased from history.

To illustrate that this is not just old history, consider how viciously and ridiculously the AMA attacked the use of ivermectin to treat covid (since it was the covid cartel’s biggest competitor). Likewise, one of the formative moments for Pierre Kory came after he testified before the Senate about ivermectin: he was shocked by the onslaught of media and medical journal campaigns from all directions trying to trash ivermectin and destroy his and his colleagues’ reputations (e.g., they were fired, and their papers that had already passed peer review were retracted). Two weeks later, he received an email from Professor William B. Grant (a vitamin D expert) saying, “Dear Dr. Kory, what they’re doing with ivermectin, they’ve been doing with vitamin D for decades,” along with a 2017 paper detailing how the industry repeatedly tries to cover up inconvenient science.

It wasn’t long before Big Tobacco became the AMA’s biggest client, resulting in countless ads like this one being published by the AMA, which continued until Fishbein’s firing (at which point he became a highly paid lobbyist for the tobacco industry):

Because they were so vicious, many people were motivated to dig into their pasts, which revealed how ruthless and sociopathic both Simmons and Fishbein were. Unfortunately, while I know from personal experience that this was the case (e.g., a friend of mine knew Fishbein’s secretary, who said that Fishbein was a truly horrible person whom she regularly saw commit heinous acts, and I also knew people who knew the revolutionary healers Fishbein targeted), I was never able to confirm many of the despicable allegations against Simmons, because the book they all cite does not cite its own sources, while the other books that make different but consistent allegations are poorly sourced.

The benefits of sunlight

One of the oldest proven therapies in medicine is sunbathing (it was one of the few remedies that actually worked against the 1918 flu, one of the most effective treatments for tuberculosis before antibiotics, and it was used for a variety of other diseases as well). Because it is safe, effective, and freely available, unscrupulous people seeking to monopolize the practice of medicine want to deny the public access to it. Incidentally, the success of sunbathing was the original inspiration for ultraviolet blood irradiation.

Because the war on sunlight has been so successful, many people are unaware of its benefits. For example, sunlight is crucial for mental health. This is most widely recognized in the context of depression (e.g., seasonal affective disorder), but the effects actually reach much further (e.g., unnatural light exposure disrupts the circadian rhythm).

This point became clear to me during my medical internship when, after a long stretch of night shifts under fluorescent lights, I noticed that I was becoming clinically depressed (something that had never happened to me before, and which led a resident I was close with to offer me antidepressants). I decided to run an experiment: I stuck it out for a few more days, then went home and sat under a full-spectrum lamp, after which I felt better almost immediately. I think my story is particularly important for healthcare workers, as many people in this field are forced to spend long periods under artificial light, and their mental health (and empathy) suffers greatly as a result. One example is a study of Chinese surgical nurses, which found that their mental health was significantly worse than that of the general population and that this deterioration was linked to a lack of sunlight.

A large epidemiological study found that women with higher UVB exposure from the sun were half as likely to develop breast cancer as women with lower sun exposure, and that men with higher sun exposure were half as likely to develop fatal prostate cancer.

Keep in mind that a 50 percent reduction in these cancers far exceeds what our existing treatments and preventive measures can achieve.

A 20-year prospective study of 29,518 women in southern Sweden drew a random sample of average women from each age group who had no significant health problems, making it one of the best epidemiological studies possible. It found that women who avoided the sun had a higher risk of death than women who regularly got sun exposure: about 60% higher overall, about 50% higher than the moderate-exposure group, and about 130% higher than the high-exposure group.

To be clear, there are very few medical interventions that come anywhere close to this level of effectiveness.
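“X% higher risk” figures are easier to compare as ratios. The short Python sketch below uses only the percentages quoted for the Swedish study: it converts each one into a risk ratio for the sun avoiders, then flips that ratio to express the same gap from the other group’s side (how much lower its risk is). This is simple arithmetic on the reported numbers, not a reanalysis of the study data.

```python
def ratio_from_percent_higher(pct_higher: float) -> float:
    """Convert 'X% higher risk' into a risk ratio (e.g. 60% higher -> 1.6)."""
    return 1 + pct_higher / 100


def percent_lower_for_comparison_group(ratio: float) -> float:
    """Express the same gap from the other group's side: how much lower its risk is."""
    return (1 - 1 / ratio) * 100


# Percent-higher mortality for sun avoiders, as quoted in the text.
reported = {"overall": 60, "vs. moderate exposure": 50, "vs. high exposure": 130}

for label, pct in reported.items():
    r = ratio_from_percent_higher(pct)
    lower = percent_lower_for_comparison_group(r)
    print(f"{label}: avoiders' ratio {r:.2f}, comparison group's risk {lower:.0f}% lower")
```

The “vs. high exposure” ratio of 2.3 is in the same range as the “mortality rate doubles” claim in the summary at the top.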

The largest increase was in deaths from heart disease, followed by deaths from causes other than heart disease and cancer, and then by deaths from cancer.

The benefit was greatest in smokers, so much so that nonsmokers who avoided the sun had the same risk of death as smokers who got regular sun exposure.

I believe this and the cardiovascular benefits are largely due to sunlight catalyzing the synthesis of nitric oxide (essential for healthy blood vessels) and of sulfates, which line cells such as the endothelium and, when exposed to infrared light (or sunlight), form the liquid crystalline water that is essential for cardiovascular protection and function.

Considering all this, I’d say you need a really good reason to avoid the sun.

Skin cancer

According to the American Academy of Dermatology, skin cancer is the most common type of cancer in the United States. Current estimates suggest that one in five Americans will develop skin cancer in their lifetime. It is estimated that approximately 9,500 people in the United States are diagnosed with skin cancer each day.

Basal cell and squamous cell carcinomas, the two most common forms of skin cancer, are treatable if detected early and treated properly.

Because exposure to UV light is the most preventable risk factor for all skin cancers, the American Academy of Dermatology recommends that everyone avoid tanning beds and protect their skin outdoors by seeking shade, wearing protective clothing – including a long-sleeved shirt, pants, a wide-brimmed hat and sunglasses with UV protection – and applying a broad-spectrum, water-resistant sunscreen with an SPF of 30 or higher to all skin not covered by clothing.

The Skin Cancer Foundation paints a similar picture: every hour, more than two people die of skin cancer in the United States. That sounds pretty scary, so let’s break down exactly what it means.
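As a first step in that breakdown, the minimal Python sketch below scales the AAD’s 9,500 diagnoses per day and the Skin Cancer Foundation’s “more than 2” deaths per hour to a 365-day year. It is plain unit conversion on the quoted numbers; because the two figures come from different organizations, the ratio at the end is only a rough indication.

```python
# Figures quoted in this section (AAD and Skin Cancer Foundation).
DIAGNOSES_PER_DAY = 9_500
DEATHS_PER_HOUR = 2  # "more than 2", so the annual figure is a lower bound

HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def per_year_from_daily(rate_per_day: float) -> float:
    """Scale a daily rate to a (non-leap) year."""
    return rate_per_day * DAYS_PER_YEAR


def per_year_from_hourly(rate_per_hour: float) -> float:
    """Scale an hourly rate to a (non-leap) year."""
    return rate_per_hour * HOURS_PER_DAY * DAYS_PER_YEAR


diagnoses = per_year_from_daily(DIAGNOSES_PER_DAY)
deaths = per_year_from_hourly(DEATHS_PER_HOUR)
print(f"~{diagnoses:,.0f} diagnoses and at least {deaths:,.0f} deaths per year")
print(f"deaths per 1,000 diagnoses: {1000 * deaths / diagnoses:.1f}")
```

That works out to roughly 3.5 million diagnoses and at least 17,520 deaths a year. For comparison, the figures given in the sections below (just over 8,000 melanoma deaths, plus roughly 2,000 to 8,000 SCC deaths) add up to about 10,000 to 16,000, so the two sources do not line up exactly.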

Basal cell carcinoma

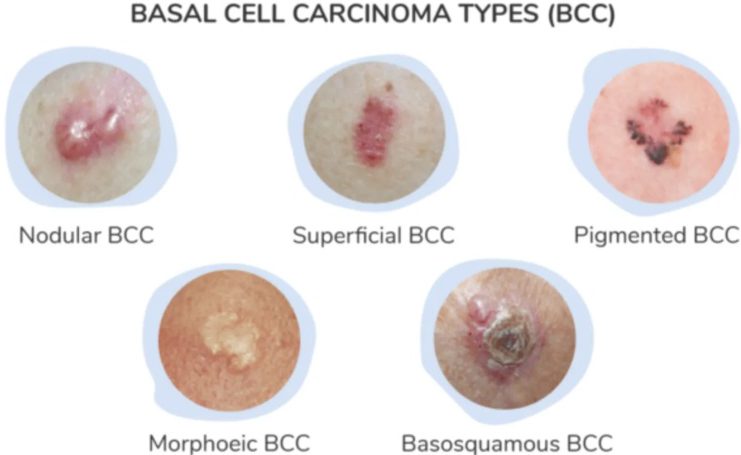

By far the most common type of skin cancer is basal cell carcinoma (which accounts for 80% of all skin cancers), which looks something like this:

The reported incidence of BCC varies widely, ranging from 14 to 10,000 cases per million people. In the United States, it is generally estimated that about 2.64 million people develop BCC each year (accounting for about 4.32 million BCCs in total, since some people develop more than one). The three main risk factors for BCC are excessive sun exposure, fair skin (which lets more sunlight penetrate the skin), and a family history of skin cancer. Because of this, the widely varying incidence of BCC largely reflects how much sunlight a population is exposed to, and BCCs typically occur on frequently sun-exposed areas (such as the face).

The most important thing about BCC is that it is not very dangerous, because it almost never metastasizes; most sources put its mortality rate at 0%. Instead, it is usually evaluated by how likely it is to recur after removal (which ranges from 65% to 95% depending on the source).

In our view, one of the major shortcomings of the excision-based approach to skin cancer is that it does not address the underlying causes, so the cancer often recurs and more and more skin has to be cut away (which becomes increasingly problematic the more is removed). This is especially concerning when the recurring cancer is potentially fatal.

Squamous cell carcinoma

The second most common type of skin cancer, squamous cell carcinoma (SCC), looks like this:

Because it is also caused by sunlight, its incidence varies widely, ranging from 260 to 4,970 cases per million person-years, with an estimated 1.8 million cases occurring in the United States each year. BCC was once thought to be about four times more common than SCC, but that gap has narrowed, and BCC is now only about twice as common. Unlike BCC, SCC can be dangerous because it can metastasize. If it is removed before it metastasizes, the survival rate is 99%, but if it is removed after metastasis, the survival rate drops to 56%. Because SCC is usually discovered before metastasis (3-9% of cases metastasize within 1-2 years), the average survival rate for this cancer is about 95%, and an estimated 2,000 people (although some estimates go as high as 8,000) die from SCC in the United States each year.
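The “about 95%” average can be checked as a weighted average of the two survival rates. The sketch below assumes, for simplicity, that every case falls into one of the two groups (removed before or after metastasis) and that the quoted 99% and 56% apply to them unchanged; it then evaluates both ends of the 3-9% metastasis range.

```python
SURVIVAL_LOCALIZED = 0.99   # removed before metastasis
SURVIVAL_METASTATIC = 0.56  # removed after metastasis


def blended_survival(p_metastatic: float) -> float:
    """Weighted average of the two survival rates for a given metastasis fraction."""
    return p_metastatic * SURVIVAL_METASTATIC + (1 - p_metastatic) * SURVIVAL_LOCALIZED


for p in (0.03, 0.09):  # the 3-9% metastasis range quoted in the text
    print(f"{p:.0%} metastatic -> {blended_survival(p):.1%} overall survival")
```

Under this simple two-group model the overall figure lands between about 95.1% and 97.7%, so “about 95%” corresponds to the upper end of the metastasis range.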

Because BCC and SCC are unlikely to be fatal, doctors are not required to report them like the other skin cancers, so there is no central database tracking the number of cases. Therefore, the figures for BCC and SCC are largely estimates.

Melanoma

It is estimated that melanoma occurs at a rate of 218 cases per million people in the United States each year (with risk varying by ethnicity). Although it accounts for only 1% of all skin cancer diagnoses, melanoma is responsible for most skin cancer deaths. Because survival rates improve significantly with early detection, there are numerous guides online to help you recognize the common signs of possible melanoma:

The five-year survival rate for melanoma depends on how far the melanoma has spread at the time of diagnosis (it ranges from 99% to 35%, with an average of 94%), so again, early detection is important. However, some cases are aggressive and metastasize quickly (so they are often not caught in time), and these variants have a survival rate of 15-22.5%. Overall, this translates to just over 8,000 deaths per year in the United States.

Note: These melanoma variants likely skew the overall survival statistics for this cancer.

Understanding melanoma is crucial because, although it is commonly believed to be linked to sunlight, this is not true. For example, a study of 528 melanoma patients found that those who had solar elastosis (a common skin change after excessive sun exposure) were 60% less likely to die from melanoma.

87% of all SCC cases occur on areas of the body exposed to high levels of sunlight, such as the face (areas that together make up only 6.2% of the body’s surface), while 82.5% of BCC cases occur in these regions. By contrast, only 22% of melanomas occur there. This suggests that SCC and BCC are related to sun exposure but melanoma is not, which is consistent with the fact that melanomas are regularly found on areas that receive little sunlight.

Outdoor workers receive 3-10 times the annual UV dose of indoor workers, and yet they are less likely to develop malignant melanoma of the skin, with an odds ratio (a measure of relative risk) half that of their indoor counterparts.
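One way to read these percentages is to compare each tumor type’s share on highly exposed sites with the share of skin those sites represent. The sketch below treats the 6.2% figure as the combined surface area of those sites and assumes, as a crude baseline, that tumors would otherwise be spread in proportion to skin area; a ratio of 1.0 would mean “exactly as expected from area alone”.

```python
SUN_EXPOSED_AREA_SHARE = 6.2  # % of body surface, per the text

share_in_sun_exposed_sites = {"SCC": 87.0, "BCC": 82.5, "melanoma": 22.0}


def enrichment(share_pct: float, area_pct: float = SUN_EXPOSED_AREA_SHARE) -> float:
    """How over-represented a tumor type is at these sites relative to their area.

    1.0 would mean tumors land there exactly in proportion to skin area.
    """
    return share_pct / area_pct


for tumor, share in share_in_sun_exposed_sites.items():
    print(f"{tumor}: {enrichment(share):.1f}x what skin area alone would predict")
```

Uniform-by-area is only a crude baseline, so these ratios put the three tumor types on a common scale rather than establishing cause.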

A 1997 meta-analysis of the available literature found that workers with significant occupational sun exposure were 14% less likely to develop melanoma.

So it’s quite frustrating that governments around the world keep advising people to use more sunscreen, even as melanoma rates keep rising (the same pattern we’ve seen with the covid vaccination drives).

Note: A case can be made that the chemicals in sunscreen contribute to skin cancer, and there is some evidence for this with certain products on the market.

A (now forgotten) 1982 study of 274 women found that exposure to fluorescent light in the workplace increased the risk of developing malignant melanoma 2.1-fold, and that this risk rose with greater exposure, both by workplace exposure level (1.8 times for moderate-exposure jobs, 2.6 times for high-exposure jobs) and by duration of employment (2.4 times the odds for 1-9 years of work, 2.8 times for 10-19 years, and 4.1 times for over 20 years).

Note: There is some evidence that these lamps also affect animals (e.g., this study showed that they dramatically reduce milk production).

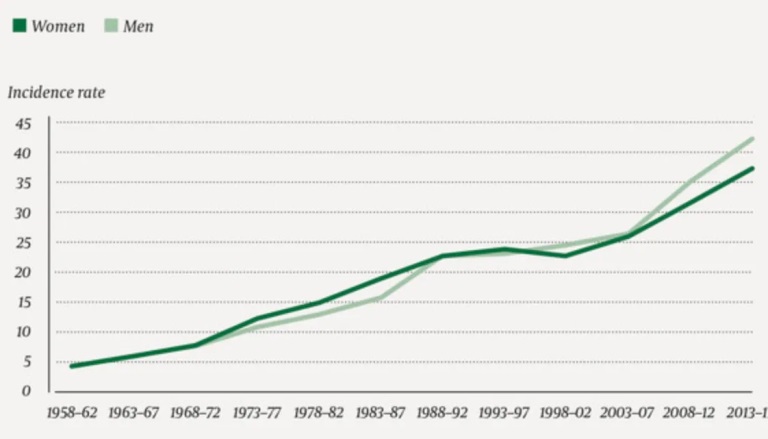

There is a significant increase in melanoma in many areas, which argues against sunlight being the main problem, as it has not changed significantly in recent decades. For example, consider these data from the Norwegian Cancer Registry on malignant melanoma:

Note: There is also some evidence linking sun exposure to increased melanoma risk, but it is rather conflicting, with some data showing a small reduction and other data a small increase (e.g., this study found that sun exposure increased melanoma risk by about 20%). However, even where a small increase in melanoma incidence is seen, the opposite is true for the size of the melanomas (e.g., in one study, melanomas on the trunk were more than twice as large as those on the arms) and for the likelihood of them being fatal (which is what really matters).

The great dermatology scam

Considering the previous section, the following should be fairly clear: by far the most common skin cancer is not dangerous, and the ones worth worrying about make up only a relatively small share of all skin cancers.

Exposure to sunlight does not cause any dangerous types of cancer (except for SCC, which can be caused by excessive exposure, and which is not nearly as dangerous as the others).

Essentially, there is no way to justify telling people to avoid the sun to prevent skin cancer, because the so-called “benefits” of this advice are far outweighed by its harms. However, this contradiction is sidestepped with a very clever linguistic trick: using a single term, “skin cancer”, for everything, which makes it possible to selectively borrow the deadliness of melanoma, the frequency of BCC, and the sun sensitivity of BCC and SCC.

“Every year I play a game with the senior trainees on a cancer specialist course run by the Royal College of Surgeons of England. I tell them that there are two potentially effective screening programs for prostate cancer: one reduces the chance of dying from the disease by 20 to 30 percent, while the other saves one life per 10,000 person-years of screening. What would you make of that as a consumer or as a public health official? Everyone votes for the first one, yet the two programs are identical, just packaged differently. Continuing to market screening as a relative risk reduction in breast cancer mortality is extremely disingenuous.”
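The two programs in the passage above can be identical because a relative risk reduction only becomes an absolute benefit once it is multiplied by the baseline risk. The sketch below works backwards from the two framings to the baseline death rate that would make them equivalent, looping over the whole 20-30% range; the function names are my own, for illustration only.

```python
def absolute_risk_reduction(baseline_rate: float, relative_reduction: float) -> float:
    """Absolute reduction = baseline risk x relative reduction."""
    return baseline_rate * relative_reduction


def implied_baseline(absolute_reduction: float, relative_reduction: float) -> float:
    """Baseline risk at which a given relative reduction equals a given absolute one."""
    return absolute_reduction / relative_reduction


# "Saves one life per 10,000 person-years of screening"
arr = 1 / 10_000

for rrr in (0.20, 0.25, 0.30):
    base = implied_baseline(arr, rrr)
    check = absolute_risk_reduction(base, rrr)  # recovers arr
    print(
        f"RRR {rrr:.0%}: baseline {base * 10_000:.1f} deaths per 10,000 person-years "
        f"-> {check * 10_000:.1f} death averted per 10,000 person-years"
    )
```

In other words, a 20-30% relative reduction applied to a baseline of roughly 3 to 5 deaths per 10,000 person-years is the same thing as one life saved per 10,000 person-years of screening.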

However, I need to stress that some skin cancers (such as many melanomas) require immediate removal. I would encourage you not to avoid dermatologists altogether, but to seek a second opinion from another dermatologist if you are unsure of what has been suggested to you, as there are also many excellent and ethical dermatologists practicing in this field.

The most sought-after field

A big part of medical education is dangling carrots (rewards) in front of medical students that they can obtain if they work hard, submit to high levels of compliance, and demonstrate above-average ability. This in turn motivates medical students to work very hard during school (e.g., by giving up their social lives) and to keep working very hard for the rest of their careers. One of the most important rewards is getting into a prestigious specialty, as these tend to be better paid.

Dermatology is generally considered the most sought-after specialty because:

- The residency after medical school is relatively short (only four years).

- It offers a relatively relaxed work-life balance (e.g., you only work on weekdays during normal hours and can often take a weekday off).

- It is relatively rare to deal with high-risk or difficult patients, so the stress in this area is very low.

Dermatology is one of the highest-paying specialties. The average starting salary for a dermatologist is $400,000 per year, although many, such as Mohs surgeons, make at least $600,000 (and often far more). In comparison, general practitioners typically make around $220,000 annually.

With an average base salary of about $700,000 per year, neurological surgery is usually the highest-paying specialty. While this is a lot, I think it is fair: the specialty is extremely demanding and nerve-wracking (e.g., many brain surgeries take 3 to 8 hours, and some take even longer while requiring extreme precision to avoid disaster and a major lawsuit), and neurosurgeons must train for 7 years after medical school, plus another 1-2 years to specialize in certain areas of neurological surgery.

It is remarkable that the dermatology profession has managed to achieve such high incomes, and as a result, the specialty tends to attract the most competitive students, who want the great lifestyle and salary that a private dermatology practice can offer (even though during the application process, nearly everyone claims to want a career in academic research, because that is the only way to get in).

By the way, one of the biggest challenges in dermatology is that accurately diagnosing the many different skin lesions that exist requires a high level of intelligence and training (which most doctors outside the field lack). Since many skin lesions are difficult to diagnose, it is important to have doctors who can do this (although AI diagnostic technologies may well take on this role in the coming years).

The changing face of dermatology

Not long ago, dermatology was one of the least desirable specialties, as much of the work consisted of treating acne and pimples in the days before Accutane (which, unlike most pharmaceuticals, actually works, but is unfortunately incredibly toxic and has left several people I knew well permanently disabled).

A relatively unknown blog by dermatologist David J. Elpern, MD, has finally explained what happened:

“Over the past 40 years, I have witnessed these changes in my field and am dismayed by the unwillingness of my colleagues to address them. This trend began in the early 1980s when the American Academy of Dermatology (AAD) assessed its members over $2 million to hire a prominent New York advertising agency to increase the public’s appreciation of our field. The Mad Men recommended educating the public that dermatologists are skin cancer experts, not just pimple removers, and so the free National Skin Cancer Screening Day was established.

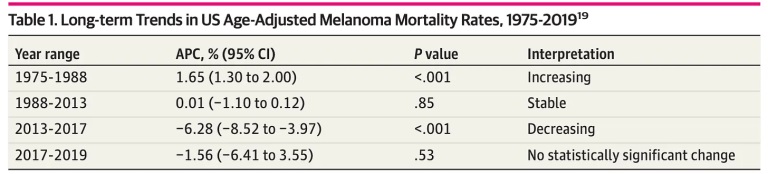

These screenings served to stoke public fear of skin cancer and led to scores of expensive, substandard procedures being performed to treat skin cancer and actinic keratoses (AKs). At the same time, pathologists expanded their definitions of melanoma, resulting in a diagnostic drift that misleadingly increased the incidence of melanoma while mortality remained at 1980 levels. Meanwhile, non-melanoma skin cancers are overtreated by legions of micrographic surgeons who often treat harmless skin cancers with unnecessarily aggressive, lucrative procedures.”

A 2021 journal article provides additional context for Dr. Elpern’s comments:

“Skin cancer screenings were introduced at the community level in the 1970s. The first nationwide public skin cancer screening program was launched by the American Academy of Dermatology in 1985 after the rising incidence and mortality rate of malignant melanoma began to attract attention in the early 1980s. During the program’s early years, President Ronald Reagan signed proclamations declaring ‘National Skin Cancer Prevention and Detection Week’ and ‘Melanoma/Skin Cancer Detection and Prevention Week for Older Americans,’ and the full-body skin examination became the gold standard for skin cancer screening.”

The article also points out that the U.S. government has long had strong doubts about the value of these screenings, and that dermatology has repeatedly had to lobby to overcome this obstacle.

In short, as has often occurred in America, a remarkably sophisticated PR campaign was launched to change society for the benefit of an industry.

I am fairly certain that some of the core components of this campaign were:

- The realization that skin cancer is by far the easiest cancer to diagnose (because you only have to see it).

- The demonization of the sun, which allowed dermatologists to portray themselves as heroes and instill as much fear of the sun as possible, especially since the psychological investment patients made in constantly applying sunscreen increased the likelihood that they would visit their dermatologist.

- Creating an enormous sales funnel through routine full-body skin exams on large numbers of otherwise healthy people, which supplied a huge pool of “potential cancers” to biopsy or remove (excise).

Note: In addition to this sales funnel, dermatologists are paid to freeze any “precancerous” lesion they discover during an exam with liquid nitrogen (which takes only seconds and adds about $100 to the cost of the exam). Unfortunately, this is often touted as eliminating a precancerous lesion, but research shows that most go away on their own (55% within a year, 70% within 5 years) and very few turn into an SCC (0.6% in a year and 2.57% in 4 years), making the cost of this procedure hard to justify, especially since it is not without side effects.

- Exploiting the fear of cancer that the medical industry spreads to justify charging large sums for questionable cancer prevention, knowing that nearly every patient agrees immediately once they hear the dreaded “C”.

This same principle was exploited with Lupron, a poor prostate cancer drug with a number of serious side effects that was later repurposed into a very lucrative drug for a variety of unproven applications (e.g., to assist in sex reassignment surgery).

In particular, dermatologists began charging a lot of money for the surgical removal of skin cancer. The main purpose of Mohs surgery is to keep the incision much smaller (i.e., not cutting away more than necessary), which can matter greatly to the patient, since large holes in the face can be devastating. This is achieved in part by pausing the operation partway through and examining the removed tissue under a microscope to determine whether all the cancer has been removed or more needs to be cut away (whereas conventional surgery uses a larger margin to be safe).

The catch with Mohs surgery is that, because the doctor performs both the surgical procedure and the pathology exam in the same visit, they can bill for a variety of separate services that add up quickly. The cost of Mohs surgery varies widely but is usually at least a few thousand dollars, and unlike with other surgeries, most of the money goes to the dermatologist.

So you can probably guess what happened: the number of Mohs surgeries performed on Medicare beneficiaries in the United States increased by 700 percent between 1992 and 2009 (leading it to be ranked first on Medicare’s list of potentially misvalued CPT codes), even though in many cases there was little evidence that Mohs surgery was superior to cheaper options such as scraping, cutting, or even applying a cream that creates a chemical burn. The big difference between these simpler treatments and Mohs is the price: hundreds of dollars versus $10,000 or even $20,000 for Mohs.
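The progression figures in the cryotherapy note above can be turned into a rough cost per SCC prevented using the “number needed to treat” idea (one divided by the risk prevented per lesion). This sketch makes two hypothetical assumptions that are not in the text and that both favor the procedure: freezing prevents progression 100% of the time (the `efficacy` default), and the roughly $100 charge applies to each lesion treated. With lower efficacy, the cost per SCC prevented only goes up.

```python
COST_PER_LESION = 100.0  # assumption: the ~$100 charge applies per lesion treated

# Progression of untreated lesions to SCC, as quoted in the note.
progression = {"1 year": 0.006, "4 years": 0.0257}


def lesions_treated_per_scc_prevented(p_progress: float, efficacy: float = 1.0) -> float:
    """Number needed to treat: 1 / (absolute risk prevented per treated lesion)."""
    return 1 / (p_progress * efficacy)


for horizon, p in progression.items():
    nnt = lesions_treated_per_scc_prevented(p)
    print(f"{horizon}: ~{nnt:.0f} lesions frozen per SCC prevented, ~${nnt * COST_PER_LESION:,.0f}")
```

Even under these best-case assumptions, roughly 39 to 167 lesions would need to be frozen to prevent a single SCC.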
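To make the stop-and-check idea concrete, here is a deliberately simplified, one-dimensional toy model, not a clinical algorithm. A lesion is reduced to a radius whose true extent is known to the simulation but not to the “surgeon”; the Mohs loop removes one thin ring per stage until the margin is clear, while conventional excision takes the visible radius plus a fixed safety margin. The 1 mm layer, the 4 mm margin and the lesion sizes are illustrative assumptions, and real Mohs re-excises only the positive part of a margin rather than a full ring.

```python
import math


def disc_area(radius_mm: float) -> float:
    """Area of a circular excision with the given radius, in mm^2."""
    return math.pi * radius_mm ** 2


def mohs_stages(visible_mm: float, true_mm: float, layer_mm: float = 1.0) -> tuple[int, float]:
    """Toy Mohs loop: take one thin layer per stage until the margin is clear.

    Each pass stands in for 'excise a layer, then check its margins under the
    microscope'. Returns (number of stages, final excision radius).
    """
    if layer_mm <= 0:
        raise ValueError("layer_mm must be positive")
    radius, stages = visible_mm, 0
    while True:
        radius += layer_mm
        stages += 1
        if radius >= true_mm:  # microscope shows clear margins: stop cutting
            return stages, radius


def conventional_radius(visible_mm: float, margin_mm: float = 4.0) -> float:
    """Conventional excision: visible extent plus a fixed safety margin."""
    return visible_mm + margin_mm


# Hypothetical lesion: 5 mm visible radius, tumor actually extends to 6.5 mm.
visible, true_extent = 5.0, 6.5
stages, mohs_r = mohs_stages(visible, true_extent)
conv_r = conventional_radius(visible)
print(f"Mohs: {stages} stages, about {disc_area(mohs_r):.0f} mm^2 removed")
print(f"Conventional: about {disc_area(conv_r):.0f} mm^2 removed, margin clear: {conv_r >= true_extent}")
```

With these made-up numbers, Mohs removes about 154 mm^2 over two stages versus about 254 mm^2 for the conventional excision. The stage count also matters for the billing point above, since in the US Mohs is coded per stage.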
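A common slip with figures like “increased by 700 percent” is reading them as sevenfold; an increase of 700% means the final count is eight times the starting one. The sketch below makes that conversion and, as an illustrative simplification (real growth was surely uneven), finds the constant yearly growth rate that would compound to eightfold between 1992 and 2009.

```python
def fold_change(percent_increase: float) -> float:
    """'Increased by X percent' as a multiplier: +700% means 8x, not 7x."""
    return 1 + percent_increase / 100


def annual_growth_rate(multiplier: float, years: float) -> float:
    """Constant yearly growth rate that compounds to `multiplier` over `years`."""
    return multiplier ** (1 / years) - 1


growth = fold_change(700)
per_year = annual_growth_rate(growth, 2009 - 1992)
print(f"{growth:.0f}x overall, about {per_year:.1%} per year, compounded")
```

That is roughly 13% more Mohs procedures every year for 17 years.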

For most benign skin tumors, “the decision to use Mohs micrographic surgery probably reflects economic benefit to the provider rather than substantial clinical benefit to the patient,” wrote Dr. Robert Stern, a Harvard dermatologist, noting that the U.S. spent more than an estimated $2 billion on Mohs surgery in 2012, with wide variation in its use. Even for tumors on sensitive sites such as the face and hands, it was used 53 percent of the time in Minnesota but only 12 percent of the time in New Mexico. Dr. Stern estimates that nearly 2 percent of all Medicare beneficiaries received Mohs surgery that year.

Dr. Stern told Elisabeth Rosenthal that in 2012 he was on a panel convened by the dermatology societies to assess when Mohs surgery was actually appropriate (due to Medicare concerns about overuse of the procedure). Due to the procedural structure of the meeting, the panel ultimately voted in favor of 83% of the possible indications for Mohs surgery, resulting in (in Stern’s words) “many of us being surprised that many aspects that were initially quite controversial now seemed beneficial and unanimous. How could this occur? We were really uncomfortable. This was not a medical question, it was a question of trade.”

To show what this change in the guidelines meant in practice: a total of 10,726 dermatologists were identified in the database, representing 1.2% of all health care professionals and 3% of total Medicare payments ($3.04 billion of approximately $100 billion), even though dermatologists make up slightly less than 1% of the country’s physicians. The median payment per dermatologist was $171,397. The average reimbursement for evaluation and management (E/M) services, i.e., general visits, was $77.59 per unit, while Mohs received an average reimbursement of $457.33 per unit. Of the dermatologists, 98.9% received reimbursement for E/M and 19.9% received payments for Mohs. Total payments to dermatologists were highest for E/M ($756 million), followed by Mohs ($550 million), destruction of premalignant lesions (cryosurgery) ($516 million), and biopsies ($289 million). Compared with dermatologists who billed less, the dermatologists with the highest billing received a larger share of their payments from Mohs and flaps/grafts and a smaller share from E/M. The top 15.9% of dermatologists received more than half of all payments.

These numbers are for 2013 and have likely increased since then (I couldn’t find a more recent study, other than one article that mentions that 5.9 million skin biopsies were performed on Medicare Part B beneficiaries in 2015—a 55% increase from the previous year). Also, consider that Medicare typically accounts for about 40% of dermatologists’ total patient volume and about 30% of their total practice revenue (although I’ve seen revenue estimates between 30-60%), so this is only a fraction of what they actually make.
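The Medicare figures above can be cross-checked with a few lines of arithmetic. This sketch uses only the numbers quoted in these two paragraphs to compute dermatology’s share of Medicare spending, the mean payment per dermatologist (for comparison with the median), each category’s share, and a rough scale-up by the 30-60% Medicare revenue share. The scale-up assumes the median dermatologist’s Medicare share falls in that range, and all values are gross Medicare payments, not take-home income.

```python
DERMATOLOGISTS = 10_726
TOTAL_PAID = 3.04e9            # dollars paid to dermatologists, 2013
ALL_MEDICARE_PAID = 100e9      # "approximately $100 billion"
MEDIAN_PER_DERMATOLOGIST = 171_397

by_category = {  # dollars
    "E/M visits": 756e6,
    "Mohs": 550e6,
    "destruction of premalignant lesions": 516e6,
    "biopsies": 289e6,
}

mean = TOTAL_PAID / DERMATOLOGISTS
print(f"share of Medicare payments: {TOTAL_PAID / ALL_MEDICARE_PAID:.1%}")
print(f"mean ${mean:,.0f} vs median ${MEDIAN_PER_DERMATOLOGIST:,}")

for name, amount in by_category.items():
    print(f"{name}: {amount / TOTAL_PAID:.0%} of dermatology payments")
covered = sum(by_category.values())
print(f"these four categories together: {covered / TOTAL_PAID:.0%}")

# Assumption: Medicare is 30-60% of practice revenue (the range quoted above).
for medicare_share in (0.30, 0.60):
    revenue = MEDIAN_PER_DERMATOLOGIST / medicare_share
    print(f"Medicare at {medicare_share:.0%}: median practice revenue ~${revenue:,.0f}")
```

A mean of roughly $283,000 against a median of $171,397 indicates a distribution pulled upward by a minority of very high billers, which fits the finding that the top 15.9% received more than half of all payments.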

As you might imagine, this opportunity soon attracted the attention of more unscrupulous parties seeking to cash in on this windfall. This, in turn, prompted the New York Times to investigate the industry, and they found what they were looking for:

“Wall Street private equity firms had entered the market and were buying up dermatology practices and staffing them with nurses and physician assistants (who were much cheaper to hire than doctors), even though they advertised to the public that patients would see a doctor. This was unfortunate, in that these pseudo-dermatologists were performing more than twice as many biopsies for suspected skin cancer as dermatologists (and had gone from billing almost no biopsies to Medicare to billing over 15% of them over the course of ten years). Likewise, actual cancers were often missed, or lesions that any dermatologist could tell were not cancer were misdiagnosed as cancer.”

While dermatologic surgeries usually turn out quite well cosmetically when performed by a competent dermatologist on a patient who is not too old, we often find that their scars can cause chronic problems (e.g., pain) that need to be addressed with either prolotherapy or neural therapy. This is most common with surgeries on the face, either because of the innate sensitivity of this densely innervated region or because the skin does not like being stretched and then sutured (which is typically required with Mohs).

To conclude this section, what always bothered me about some of the dermatology offices I have worked in was how “salesy” they felt: staff repeated the same scripts over and over to move patients through the skin cancer sales funnel, while the dermatologists were intensely focused on making everything and everyone look as beautiful as possible, but only under the knife and for hefty fees (alongside numerous advertisements designed to play on patients’ body insecurities).

Changes in skin cancer

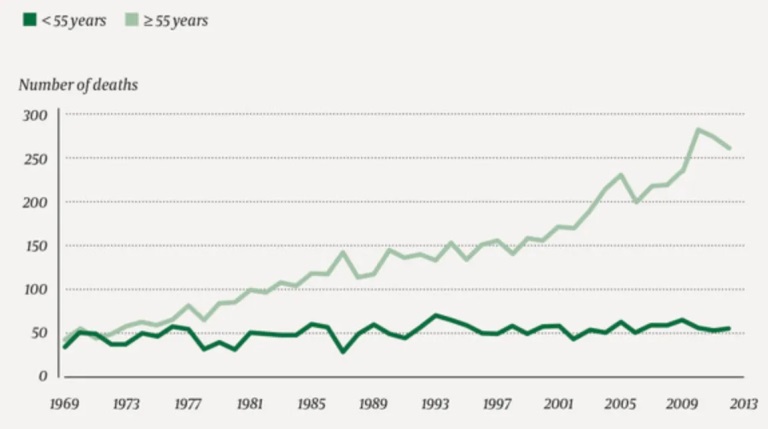

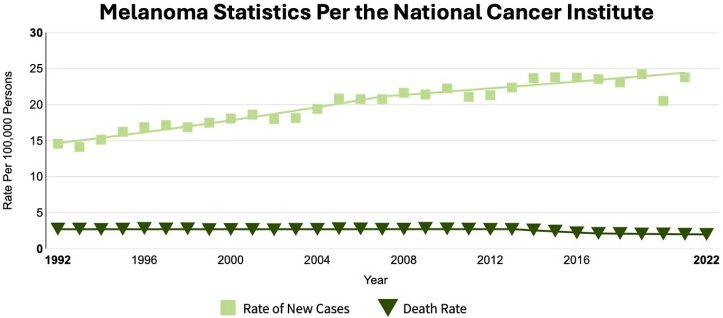

Considering how much money is spent on fighting skin cancer, you would expect some results. Unfortunately, as with many other aspects of the cancer industry, that is not the case. Instead, we keep finding that more cancers (including ones that would previously have been considered benign) are being diagnosed, while the mortality rate, for the most part, does not change significantly.

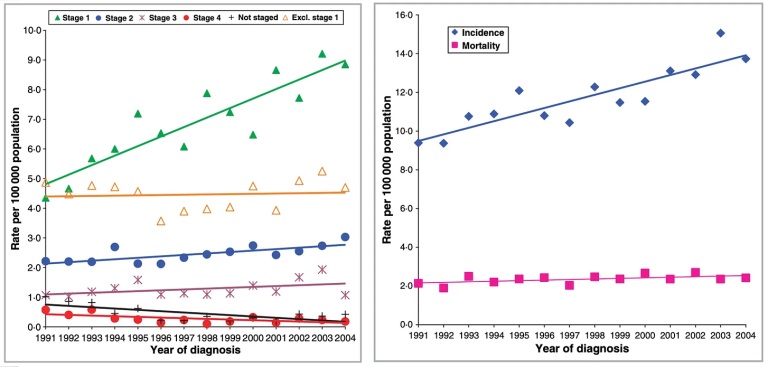

The best evidence for this comes from a study that looked at which types of malignant melanoma were actually being biopsied and found that the increase in “skin cancer” consisted almost entirely of stage 1 melanomas, which rarely cause problems:

This study, in turn, illustrates exactly what the result of our war on skin cancer is:

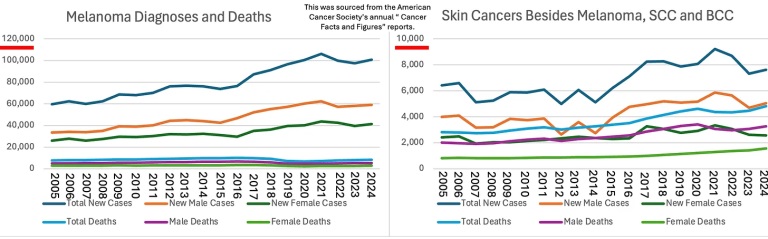

Since many suspected that the covid vaccines might lead to an increase in melanoma (or other skin cancers), and I couldn’t find the relevant statistics online, I decided to create them by compiling all the available annual reports from the American Cancer Society into a few charts:

Dealing with skin cancer and sunlight

The main purpose of this article was to encourage each of you because I really don’t think it’s appropriate for dermatologists to exploit patients’ fear of cancer to push this lucrative business model.

However, I realize that this article also raises some obvious questions, such as:

- Are there less invasive alternatives to skin cancer surgery?

- What is the best way to stay safe in the sun?

- What actually causes skin cancer and how can it be prevented?

Author: A Midwestern Doctor

yogaesoteric

May 31, 2024