The Party Breaker: Silicon Valley Is Addicting Us to Our Phones

By Bianca Bosker, The Atlantic

Tristan Harris believes Silicon Valley is addicting us to our phones. He’s determined to make it stop.

On an evening in San Francisco, Tristan Harris, a former product philosopher at Google, took a name tag from a man in pajamas called “Honey Bear” and wrote down his pseudonym for the night: “Presence”.

Harris had just arrived at Unplug SF, a “digital detox experiment” held in honor of the National Day of Unplugging, and the organizers had banned real names.

Also outlawed: clocks, “w-talk” (work talk), and “WMDs” (the planners’ loaded shorthand for wireless mobile devices). Harris, a slight 32-year-old with copper hair and a tidy beard, surrendered his iPhone, a device he considers so addictive that he’s called it “a slot machine in my pocket”.

He keeps the background set to an image of Scrabble tiles spelling out the words face down, a reminder of the device’s optimal position.

I followed him into a spacious venue packed with nearly 400 people painting faces, filling in coloring books, and wrapping yarn around chopsticks. Despite the cheerful summer-camp atmosphere, the event was a reminder of the binary choice facing smartphone owners, who, according to one study, consult their device 150 times a day: leave the WMD on and deal with relentless prompts compelling them to check its screen, or else completely disconnect. “It doesn’t have to be the all-or-nothing choice,” Harris told me after taking in the arts-and-crafts scene. “That’s a design failure.”

Harris is the closest thing Silicon Valley has to a conscience. As the co-founder of Time Well Spent, an advocacy group, he is trying to bring moral integrity to software design: essentially, to persuade the tech world to help us disengage more easily from its devices.

While some blame our collective tech addiction on personal failings, like weak willpower, Harris points a finger at the software itself. That itch to glance at our phone is a natural reaction to apps and websites engineered to get us scrolling as frequently as possible.

The attention economy, which showers profits on companies that seize our focus, has kicked off what Harris calls a “race to the bottom of the brain stem”.

“You could say that it’s my responsibility” to exert self-control when it comes to digital usage, he explains, “but that’s not acknowledging that there’s a thousand people on the other side of the screen whose job is to break down whatever responsibility I can maintain.”

In short, we’ve lost control of our relationship with technology because technology has become better at controlling us.

Under the auspices of Time Well Spent, Harris is leading a movement to change the fundamentals of software design. He is rallying product designers to adopt a “Hippocratic oath” for software that, he explains, would check the practice of “exposing people’s psychological vulnerabilities” and restore “agency” to users. “There needs to be new ratings, new criteria, new design standards, new certification standards,” he says. “There is a way to design based not on addiction.”

Joe Edelman ‒ who did much of the research informing Time Well Spent’s vision and is the co-director of a think tank advocating for more-respectful software design ‒ likens Harris to a tech-focused Ralph Nader.

Other people, including Adam Alter, a marketing professor at NYU, have championed theses similar to Harris’s; but according to Josh Elman, a Silicon Valley veteran with the venture-capital firm Greylock Partners, Harris is “the first putting it together in this way” – articulating the problem, its societal cost, and ideas for tackling it.

Elman compares the tech industry to Big Tobacco before the link between cigarettes and cancer was established: keen to give customers more of what they want, yet simultaneously inflicting collateral damage on their lives.

Harris, Elman says, is offering Silicon Valley a chance to reevaluate before more-immersive technology, like virtual reality, pushes us beyond a point of no return.

All this talk of hacking human psychology could sound paranoid, if Harris had not witnessed the manipulation firsthand. Raised in the Bay Area by a single mother employed as an advocate for injured workers, Harris spent his childhood creating simple software for Macintosh computers and writing fan mail to Steve Wozniak, a co-founder of Apple.

He studied computer science at Stanford while interning at Apple, then embarked on a master’s degree at Stanford, where he joined the Persuasive Technology Lab.

Run by the experimental psychologist B. J. Fogg, the lab has earned a cult-like following among entrepreneurs hoping to master Fogg’s principles of “behavior design” – a euphemism for what sometimes amounts to building software that nudges us toward the habits a company seeks to instill. (One of Instagram’s co-founders is an alumnus.)

In Fogg’s course, Harris studied the psychology of behavior change, such as how clicker training for dogs, among other methods of conditioning, can inspire products for people.

For example, rewarding someone with an instantaneous “like” after they post a photo can reinforce the action, and potentially shift it from an occasional to a daily activity.

Harris learned that the most-successful sites and apps hook us by tapping into deep-seated human needs. When LinkedIn launched, for instance, it created a hub-and-spoke icon to visually represent the size of each user’s network.

That triggered people’s innate craving for social approval and, in turn, got them scrambling to connect.

“Even though at the time there was nothing useful you could do with LinkedIn, that simple icon had a powerful effect in tapping into people’s desire not to look like losers,” Fogg told me.

Harris began to see that technology is not, as so many engineers claim, a neutral tool; rather, it’s capable of coaxing us to act in certain ways. And he was troubled that out of 10 sessions in Fogg’s course, only one addressed the ethics of these persuasive tactics. (Fogg says that topic is “woven throughout” the curriculum.)

Harris dropped out of the master’s program to launch a start-up that installed explanatory pop-ups across thousands of sites, including The New York Times.

It was his first direct exposure to the war being waged for our time, and Harris felt torn between his company’s social mission, which was to spark curiosity by making facts easily accessible, and pressure from publishers to corral users into spending more and more minutes on their sites.

Though Harris insists he steered clear of persuasive tactics, he grew more familiar with how they were applied.

He came to conceive of them as “hijacking techniques” ‒ the digital version of pumping sugar, salt, and fat into junk food in order to induce bingeing.

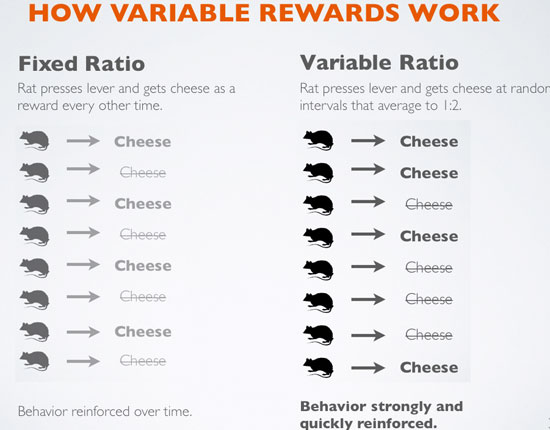

McDonald’s hooks us by appealing to our bodies’ craving for certain flavors; Facebook, Instagram, and Twitter hook us by delivering what psychologists call “variable rewards”.

Messages, photos, and “likes” appear on no set schedule, so we check for them compulsively, never sure when we’ll receive that dopamine-activating prize. (Delivering rewards at random has been proved to quickly and strongly reinforce behavior.)

Checking that Facebook friend request will take only a few seconds, we reason, though research shows that when interrupted, people take an average of 25 minutes to return to their original task.

Sites foster a sort of distracted lingering partly by lumping multiple services together. To answer the friend request, we’ll pass by the News Feed, where pictures and auto-play videos seduce us into scrolling through an infinite stream of posts ‒ what Harris calls a “bottomless bowl,” referring to a study that found people eat 73 percent more soup out of self-refilling bowls than out of regular ones, without realizing they’ve consumed extra.

The “friend request” tab will nudge us to add even more contacts by suggesting “people you may know,” and in a split second, our unconscious impulses cause the cycle to continue: once we send the friend request, an alert appears on the recipient’s phone in bright red ‒ a “trigger” color, Harris says, more likely than some other hues to make people click – and because seeing our name taps into a hardwired sense of social obligation, she will drop everything to answer.

In the end, he says, companies “stand back watching as a billion people run around like chickens with their heads cut off, aimlessly, responding to each other and feeling indebted to each other”.

A Facebook spokesperson told me the social network focuses on maximizing the quality of the experience – not the time its users spend on the site ‒ and surveys its users daily to gauge success.

In response to this feedback, Facebook tweaked its News Feed algorithm to punish clickbait ‒ stories with sensationalist headlines designed to attract readers. (LinkedIn and Instagram declined requests for comment. Twitter did not reply to multiple queries.)

Even so, a niche group of consultants has emerged to teach companies how to make their services irresistible. One such specialist is Nir Eyal, the author of Hooked: How to Build Habit-Forming Products, who has lectured or consulted for firms such as LinkedIn and Instagram.

A blog post he wrote touting the value of variable rewards is titled Want to Hook Your Users? Drive Them Crazy.

While asserting that companies are morally obligated to help those genuinely addicted to their services, Eyal contends that social media merely satisfies our appetite for entertainment in the same way TV or novels do, and that the latest technology tends to get vilified simply because it’s new, but eventually people find balance.

“Saying ‘Don’t use these techniques’ is essentially saying ‘Don’t make your products fun to use’. That’s silly,” Eyal told me.

“With every new technology, the older generation says ‘Kids these days are using too much of this and too much of that and it’s melting their brains.’ And it turns out that what we’ve always done is to adapt.”

Google acquired Harris’s company in 2011, and he ended up working on Gmail’s Inbox app. (He’s quick to note that while he was there, it was never an explicit goal to increase time spent on Gmail.)

A year into his tenure, Harris grew concerned about the failure to consider how seemingly minor design choices, such as having phones buzz with each new email, would cascade into billions of interruptions. His team dedicated months to fine-tuning the aesthetics of the Gmail app with the aim of building a more “delightful” email experience.

But to him that missed the bigger picture: instead of trying to improve email, why not ask how email could improve our lives ‒ or, for that matter, whether each design decision was making our lives worse?

Six months after attending Burning Man in the Nevada desert, a trip Harris says helped him with “waking up and questioning my own beliefs,” he quietly released A Call to Minimize Distraction & Respect Users’ Attention, a 144-page Google Slides presentation.

In it, he declared, “Never before in history have the decisions of a handful of designers (mostly men, white, living in SF, aged 25-35) working at 3 companies” ‒ Google, Apple, and Facebook – “had so much impact on how millions of people around the world spend their attention… We should feel an enormous responsibility to get this right.”

Although Harris sent the presentation to just 10 of his closest colleagues, it quickly spread to more than 5,000 Google employees, including then-CEO Larry Page, who discussed it with Harris in a meeting a year later.

“It sparked something,” recalls Mamie Rheingold, a former Google staffer who organized an internal Q&A session with Harris at the company’s headquarters. “He did successfully create a dialogue and open conversation about this in the company.”

Harris parlayed his presentation into a position as product philosopher, which involved researching ways Google could adopt ethical design.

But he says he came up against “inertia”. Product road maps had to be followed, and fixing tools that were obviously broken took precedence over systematically rethinking services. Chris Messina, then a designer at Google, says little changed following the release of Harris’s slides: “It was one of those things where there’s a lot of head nods, and then people go back to work.”

Harris told me some colleagues misinterpreted his message, thinking that he was proposing banning people from social media, or that the solution was simply sending fewer notifications. (Google declined to comment.)

Harris left the company to push for change more widely, buoyed by a growing network of supporters that includes the MIT professor Sherry Turkle; Meetup’s CEO, Scott Heiferman; and Justin Rosenstein, a co-inventor of the “like” button; along with fed-up users and concerned employees across the industry.

“Pretty much every big company that’s manipulating users has been very interested in our work,” says Joe Edelman, who has spent the past five years trading ideas and leading workshops with Harris.

Through Time Well Spent, his advocacy group, Harris hopes to mobilize support for what he likens to an organic-food movement, but for software: an alternative built around core values, chief of which is helping us spend our time well, instead of demanding more of it.

Thus far, Time Well Spent is more a label for his crusade ‒ and a vision he hopes others will embrace ‒ than a full-blown organization. (Harris, its sole employee, self-funds it.)

Yet he’s amassed a network of volunteers keen to get involved, thanks in part to his frequent cameos on the thought-leader speaker circuit, including talks at Harvard’s Berkman Klein Center for Internet & Society; the O’Reilly Design Conference; an internal meeting of Facebook designers; and a TEDx event, whose video has been viewed more than 1 million times online.

Tim O’Reilly, the founder of O’Reilly Media and an early web pioneer, told me Harris’s ideas are “definitely something that people who are influential are listening to and thinking about.” Even Fogg, who stopped wearing his Apple Watch because its incessant notifications annoyed him, is a fan of Harris’s work: “It’s a brave thing to do and a hard thing to do.”

At Unplug SF, a burly man calling himself “Haus” enveloped Harris in a bear hug. “This is the antidote!” Haus cheered. “This is the antivenom!” All evening, I watched people pull Harris aside to say hello or ask to schedule a meeting. Someone cornered Harris to tell him about his internet “sabbatical,” but Harris cut him off.

Harris admits that researching the ways our time gets hijacked has made him slightly obsessive about evaluating what counts as “time well spent” in his own life.

The hypnosis class Harris went to before meeting me ‒ because he suspects the passive state we enter while scrolling through feeds is similar to being hypnotized ‒ was not time well spent.

The slow-moving course, he told me, was “low bit rate” ‒ a technical term for data-transfer speeds.

Attending the digital detox? Time very well spent. He was delighted to get swept up in a mass game of rock-paper-scissors, where a series of one-on-one elimination contests culminated in an onstage showdown between “Joe” and “Moonlight”.

Harris has a tendency to immerse himself in a single activity at a time. In conversation, he rarely breaks eye contact and will occasionally rest a hand on his interlocutor’s arm, as if to keep both parties present in the moment.

He got so wrapped up in our chat one afternoon that he attempted to get into an idling Uber that was not an Uber at all, but a car that had paused at a stop sign.

An accordion player and tango dancer in his spare time who pairs plaid shirts with a bracelet that has presence stamped into a silver charm, Harris gives off a preppy-hippie vibe that allows him to move comfortably between Palo Alto boardrooms and device-free retreats.

In that sense, he had a great deal in common with the other Unplug SF attendees, many of whom belong to a new class of tech elites “waking up” to their industry’s unwelcome side effects.

For many entrepreneurs, this epiphany has come with age, children, and the peace of mind of having several million in the bank, says Soren Gordhamer, the creator of Wisdom 2.0, a conference series about maintaining “presence and purpose” in the digital age.

“They feel guilty,” Gordhamer says. “They are realizing they built this thing that’s so addictive.”

I asked Harris whether he felt guilty about having joined Google, which has inserted its technology into our pockets, glasses, watches, and cars.

He didn’t. He acknowledged that some divisions, such as YouTube, benefit from coaxing us to stare at our screens. But he justified his decision to work there with the logic that since Google controls three interfaces through which millions engage with technology ‒ Gmail, Android, and Chrome ‒ the company was the “first line of defense”.

Getting Google to rethink those products, as he’d attempted to do, had the potential to transform our online experience.

At a restaurant around the corner from Unplug SF, Harris demonstrated an alternative way of interacting with WMDs, based on his own self-defense tactics.

Certain tips were intuitive: he’s “almost militaristic about turning off notifications” on his iPhone, and he set a custom vibration pattern for text messages, so he can feel the difference between an automated alert and a human’s words.

Other tips drew on Harris’s study of psychology. Since merely glimpsing an app’s icon will “trigger this whole set of sensations and thoughts,” he pruned the first screen of his phone to include only apps, such as Uber and Google Maps, that perform a single function and thus run a low risk of “bottomless bowling”.

He tried to make his phone look minimalist: taking a cue from a Google experiment that cut employees’ M&M snacking by moving the candy from clear to opaque containers, he buried colorful icons ‒ along with time-sucking apps like Gmail and WhatsApp – inside folders on the second page of his iPhone.

As a result, that screen was practically grayscale. Harris launches apps by using what he calls the phone’s “consciousness filter” ‒ typing Instagram, say, into its search bar – which reduces impulsive tapping.

For similar reasons, Harris keeps a Post-it on his laptop with this instruction: “Do not open without intention”.

His approach seems to have worked. I’m usually quick to be annoyed by friends reaching for their phones, but next to Harris, I felt like an addict. Wary of being judged, I made a point not to check my iPhone unless he checked his first, but he went so long without peeking that I started getting antsy. Harris assured me that I was far from an exception.

“Our generation relies on our phones for our moment-to-moment choices about who we’re hanging out with, what we should be thinking about, who we owe a response to, and what’s important in our lives,” he said.

“And if that’s the thing that you’ll outsource your thoughts to, forget the brain implant. That is the brain implant. You refer to it all the time.”

Curious to hear more about Harris’s plan for tackling manipulative software, I tagged along one morning to his meeting with two entrepreneurs eager to incorporate Time Well Spent values into their start-up.

Harris, energized from a yoga class, met me at a bakery not far from the “intentional community house” where he lives with a dozen or so housemates.

We were joined by Micha Mikailian and Johnny Chan, the co-founders of an ad blocker, Intently, that replaces advertising with “intentions” reminding people to “Follow Your Bliss” or “Be Present”. Previously, they’d run a marketing and advertising agency.

“One day I was in a meditation practice. I just got the vision for Intently,” said Mikailian, who sported a chunky turquoise bracelet and a man bun.

“It fully aligned with my purpose,” said Chan.

They were interested in learning what it would take to integrate ethical design. Coordinating loosely with Joe Edelman, Harris is developing a code of conduct ‒ the Hippocratic oath for software designers – and a playbook of best practices that can guide start-ups and corporations toward products that “treat people with respect”.

Having companies rethink the metrics by which they measure success would be a start. “You have to imagine: What are the concrete benefits landed in space and in time in a person’s life?” Harris said, coaching Mikailian and Chan.

At his speaking engagements, Harris has presented prototype products that embody other principles of ethical design. He argues that technology should help us set boundaries.

This could be achieved by, for example, an inbox that asks how much time we want to dedicate to email, then gently reminds us when we’ve exceeded our quota. Technology should give us the ability to see where our time goes, so we can make informed decisions – imagine your phone alerting you when you’ve unlocked it for the 14th time in an hour.

And technology should help us meet our goals, give us control over our relationships, and enable us to disengage without anxiety. Harris has demoed a hypothetical “focus mode” for Gmail that would pause incoming messages until someone has finished concentrating on a task, while allowing interruptions in case of an emergency. (Slack has implemented a similar feature.)

Harris hopes to create a Time Well Spent certification – akin to the LEED (Leadership in Energy and Environmental Design) seal or an organic label ‒ that would designate software made with those values in mind.

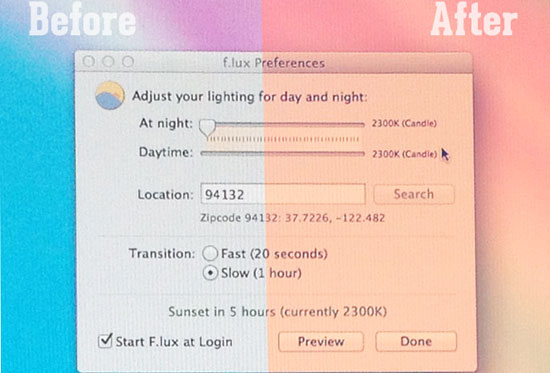

He already has a shortlist of apps that he endorses as early exemplars of the ethos, such as Pocket, Calendly, and f.lux, which, respectively, saves articles for future reading, lets people book empty slots on an individual’s calendar to streamline the process of scheduling meetings, and aims to improve sleep quality by adding a pinkish cast to the circadian-rhythm-disrupting blue light of screens. Intently could potentially join this coalition, he volunteered.

As a first step toward identifying other services that could qualify, Harris has experimented with creating software that would capture how many hours someone devotes weekly to each app on her phone, then ask her which ones were worthwhile.

The data could be compiled to create a leaderboard that shames apps that addict but fail to satisfy. Edelman has released a related tool for websites, called Hindsight. “We have to change what it means to win,” Harris says.

The biggest obstacle to incorporating ethical design and “agency” is not technical complexity. According to Harris, it’s a “will thing”. And on that front, even his supporters worry that the culture of Silicon Valley may be inherently at odds with anything that undermines engagement or growth.

“This is not the place where people tend to want to slow down and be deliberate about their actions and how their actions impact others,” says Jason Fried, who has spent the past 12 years running Basecamp, a project-management tool.

“They want to make things more sugary and more tasty, and pull you in, and justify billions of dollars of valuation and hundreds of millions of dollars [in] venture capital funds.”

Rather than dismantling the entire attention economy, Harris hopes that companies will, at the very least, create a healthier alternative to the current diet of tech junk food.

He recognizes that this shift would require reevaluating entrenched business models so success no longer hinges on claiming attention and time.

As with organic vegetables, it’s possible that the first generation of Time Well Spent software might be available at a premium price, to make up for lost advertising dollars.

“Would you pay $7 a month for a version of Facebook that was built entirely to empower you to live your life?” Harris says. “I think a lot of people would pay for that.”

Like splurging on grass-fed beef, paying for services that are available for free and disconnecting for days (even hours) at a time are luxuries that few but the reasonably well-off can afford.

I asked Harris whether this risked stratifying tech consumption, such that the privileged escape the mental hijacking and everyone else remains subjected to it. “It creates a new inequality. It does,” Harris admitted. But he countered that if his movement gains steam, broader change could occur, much in the way Walmart now stocks organic produce.

Currently, though, the trend is toward deeper manipulation in ever more sophisticated forms. Harris fears that Snapchat’s tactics for hooking users make Facebook’s look quaint.

Facebook automatically tells a message’s sender when the recipient reads the note ‒ a design choice that, per Fogg’s logic, activates our hardwired sense of social reciprocity and encourages the recipient to respond.

Snapchat ups the ante: unless the default settings are changed, users are informed the instant a friend begins typing a message to them – which effectively makes it a faux pas not to finish a message you start.

Harris worries that the app’s Snapstreak feature, which displays how many days in a row two friends have snapped each other and rewards their loyalty with an emoji, seems to have been pulled straight from Fogg’s inventory of persuasive tactics.

Research shared with Harris by Emily Weinstein, a Harvard doctoral candidate, shows that Snapstreak is driving some teenagers nuts ‒ to the point that before going on vacation, they give friends their log-in information and beg them to snap in their stead.

“To be honest, it made me sick to my stomach to hear these anecdotes,” Harris told me.

Harris thinks his best shot at improving the status quo is to get users riled up about the ways they’re being manipulated, then create a groundswell of support for technology that respects people’s agency ‒ something akin to the privacy outcry that prodded companies to roll out personal-information protections.

While Harris’s experience at Google convinced him that users must demand change for it to happen, Edelman suggests that the incentive to adapt can originate within the industry, as engineers become reluctant to build products they view as unethical and companies face a brain drain.

The more people recognize the repercussions of tech firms’ persuasive tactics, the more working there “becomes uncool,” he says, a view I heard echoed by others in his field. “You can really burn through engineers hard.”

There is arguably an element of hypocrisy to the enlightened image that Silicon Valley projects, especially with its recent embrace of “mindfulness”.

Companies like Google and Facebook, which have offered mindfulness training and meditation spaces for their employees, position themselves as corporate leaders in this movement.

Yet this emphasis on mindfulness and consciousness, which has extended far beyond the tech world, puts the burden on users to train their focus, without acknowledging that the devices in their hands are engineered to chip away at their concentration.

It’s like telling people to get healthy by exercising more, then offering the choice between a Big Mac and a Quarter Pounder when they sit down for a meal.

And being aware of software’s seductive power does not mean being immune to its influence.

One evening, just as we were about to part ways for the night, Harris stood talking by his car when his phone flashed with a new text message. He glanced down at the screen and interrupted himself mid-sentence.

“Oh!” he announced, more to his phone than to me, and mumbled something about what a coincidence it was that the person texting him knew his friend. He looked back up sheepishly. “That’s a great example,” he said, waving his phone. “I had no control over the process.”

yogaesoteric

December 1, 2017